Scientists from the University of Cambridge have combined automated text analysis with the robot scientist ‘Eve’ to semi-automate the process of reproducing research results. The lack of reproducibility is one of the biggest crises facing modern science.

Scientific findings should be not only ‘repeatable’ (replicable in the same laboratory under identical conditions) but also ‘reproducible’ (replicable in other laboratories under similar conditions). Ideally, results should also be ‘robust’ (replicable under a wide range of conditions). Only a small percentage of published biomedical results have been tested for reproducibility and robustness, and when they are tested, reproducibility is frequently not observed. This is termed “the reproducibility crisis,” and it is one of the most pressing issues in biomedicine.

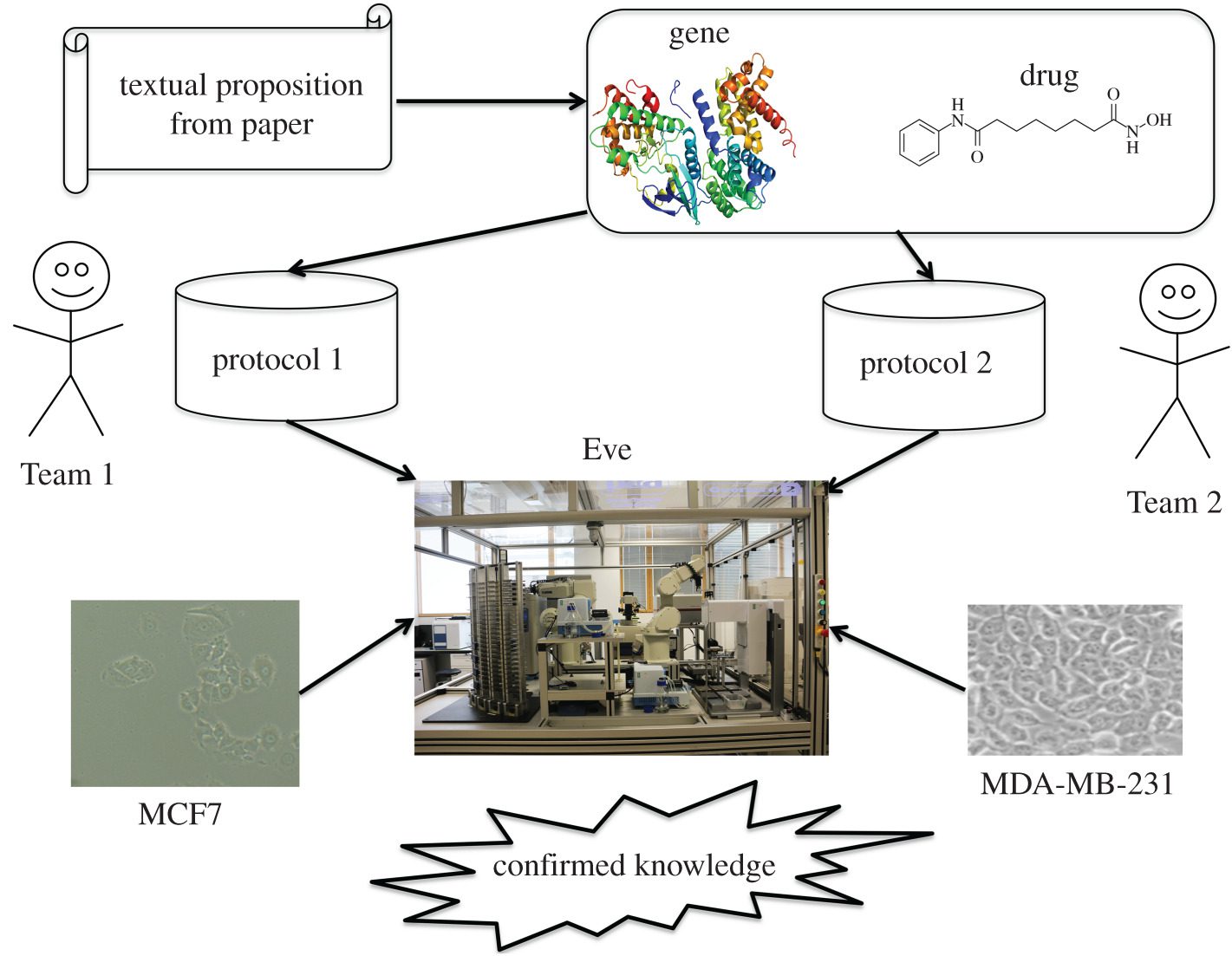

Image Source: Testing the reproducibility and robustness of the cancer biology literature by robot.

The researchers analyzed over 12,000 research papers on breast cancer cell biology. From these, a set of 74 papers of high scientific interest was selected, of which fewer than one-third (22 papers) were found to be reproducible. In two cases, Eve made serendipitous discoveries.

The findings, reported in the Journal of the Royal Society Interface, show that robotics and artificial intelligence can be used to address the reproducibility crisis.

“One of the big advantages of using machines to do science is they’re more precise and record details more exactly than a human can.” Prof. Ross King, Cambridge’s Department of Chemical Engineering and Biotechnology

A successful experiment is one in which another scientist, working in a different laboratory under similar conditions, can achieve the same result. However, more than 70% of researchers have tried and failed to reproduce another scientist’s experiments, and more than half have failed to reproduce some of their own.

“Good science relies on results being reproducible: otherwise, the results are essentially meaningless,” says Professor Ross King, who led the research at Cambridge’s Department of Chemical Engineering and Biotechnology. “This is particularly critical in biomedicine: if I’m a patient and I read about a promising new potential treatment, but the results aren’t reproducible, how am I supposed to know what to believe? The result could be people losing trust in science.”

A few years ago, King created the robot scientist Eve, a computer/robotic system that conducts scientific investigations using artificial intelligence (AI) approaches.

One of the main advantages of using machines to do science is that they are more precise and record details more exactly than humans can. This makes them well suited to the task of attempting to reproduce scientific results.

As part of a DARPA-funded project, King and his colleagues from the United Kingdom, the United States, and Sweden designed an experiment that combines AI and robotics to help address the reproducibility crisis. Computers were taught to read and understand scientific papers, and Eve then attempted to replicate the experiments they described.

The team concentrated on cancer research for this study. The cancer literature is vast, yet hardly anyone repeats the same experiment, which makes reproducibility a significant concern. Given the vast sums of money spent on cancer research and the sheer number of people affected by cancer worldwide, it is an area where reproducibility urgently needs to improve.

The researchers used automated text mining techniques to extract statements about a change in gene expression in response to drug treatment in breast cancer from an initial set of over 12,000 published scientific papers. A total of 74 papers were chosen from this set.

Two human teams sought to replicate the 74 results using Eve and two breast cancer cell lines. For 43 papers, statistically significant evidence for repeatability was found, indicating that the results could be replicated under identical conditions; for 22 papers, statistically significant evidence for reproducibility or robustness was found, indicating that different scientists under similar conditions could replicate the results. In two cases, the automation yielded unexpected results.
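The proportions behind these counts can be checked with a few lines of arithmetic. The sketch below is only an illustration using the three figures reported in this article (74, 43, and 22); the variable and function names are hypothetical, and it is not part of the study’s own analysis.

```python
from fractions import Fraction

# Counts taken from the article; the names are illustrative only.
TOTAL_PAPERS = 74   # papers selected for testing
REPEATABLE = 43     # significant evidence of repeatability
REPRODUCIBLE = 22   # significant evidence of reproducibility or robustness


def share(count: int, total: int) -> float:
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


repeatable_pct = share(REPEATABLE, TOTAL_PAPERS)
reproducible_pct = share(REPRODUCIBLE, TOTAL_PAPERS)

# Exact comparison with one-third, avoiding floating-point rounding.
below_one_third = Fraction(REPRODUCIBLE, TOTAL_PAPERS) < Fraction(1, 3)

print(f"Repeatable: {repeatable_pct}%")
print(f"Reproducible or robust: {reproducible_pct}%")
print(f"Less than one-third reproducible: {below_one_third}")
```

In other words, a little under 60% of the tested papers were repeatable, and just under 30% were reproducible or robust, which is where the “less than one-third” figure comes from.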

While just 22 of the 74 papers in this study were found to be reproducible, the researchers say this does not mean that the rest are not scientifically reproducible or robust. There are many reasons why a specific finding may not be reproducible in another laboratory. The most important difference the authors found was that it matters who carries out the experiment, because every person is different.

According to King, this research shows that automated and semi-automated techniques can help address the reproducibility crisis, and that reproducibility testing should become a standard part of the scientific process.

“It’s quite shocking how big of an issue reproducibility is in science, and it’s going to need a complete overhaul in the way that a lot of science is done. We think that machines have a key role to play in helping to fix it.”

Prof. Ross King

Story Source: Roper, K., Abdel-Rehim, A., Hubbard, S., Carpenter, M., Rzhetsky, A., Soldatova, L., & King, R. D. (2022). Testing the reproducibility and robustness of the cancer biology literature by robot. Journal of the Royal Society Interface, 19(189), 20210821. https://www.cam.ac.uk/research/news/robot-scientist-eve-finds-that-less-than-one-third-of-scientific-results-are-reproducible

Dr. Tamanna Anwar is a Scientist and Co-founder of the Centre of Bioinformatics Research and Technology (CBIRT). She is a passionate bioinformatics scientist and a visionary entrepreneur. Dr. Tamanna has worked as a Young Scientist at Jawaharlal Nehru University, New Delhi. She has also worked as a Postdoctoral Fellow at the University of Saskatchewan, Canada. She has several scientific research publications in high-impact research journals. Her latest endeavor is the development of a platform that acts as a one-stop solution for all bioinformatics related information as well as developing a bioinformatics news portal to report cutting-edge bioinformatics breakthroughs.