According to a study led by Prof. Judy Gichoya of Emory University, standard deep learning models accurately predicted patients’ self-reported race using only diagnostic images such as X-rays, CT scans, and mammograms. They did so even when the images were heavily degraded, cropped, or corrupted with noise.

Studies have shown that AI deep learning models can mimic the unconscious racial biases of the people who build them. For example, GPT-3, a powerful AI text generator, produced a script that cast characters in stereotypical roles and reflected damaging preconceptions. In healthcare, various models have been developed to predict patients’ sex and age from chest X-rays, and retinal scans can help AI predict gender, age, and cardiac markers. Race is a different matter. Predicting it from medical scans alone is next to impossible even for the most qualified physicians, and this has been assumed to help keep image-based diagnosis fair. A computational model that does so with high accuracy therefore poses a threat, because it could reinforce racial disparities and lead to the misdiagnosis of patients from marginalized racial and ethnic groups.

With such dangers in mind, Gichoya et al. published a study titled “AI recognition of patient race in medical imaging: a modelling study” in The Lancet Digital Health. The study investigated how AI models recognize patients’ self-reported racial identity, using large-scale public and private medical imaging datasets. The research team included scientists from four countries: the US, Taiwan, Australia, and Canada.

Findings

The authors collected medical images, including chest X-rays, chest CT scans, limb X-rays, and mammograms, from public and private datasets in which no single racial group was consistently dominant. They then ran three sets of experiments to unravel the possible mechanisms behind the AI’s ability to recognize race.

The researchers used three of the largest chest X-ray datasets, MXR (MIMIC-CXR), CXP (CheXpert), and EMX (the Emory chest X-ray dataset), training the model on one dataset and testing it on a completely different one. Using only chest X-rays, the deep learning model predicted with high accuracy whether a patient was Asian, Black, or White.

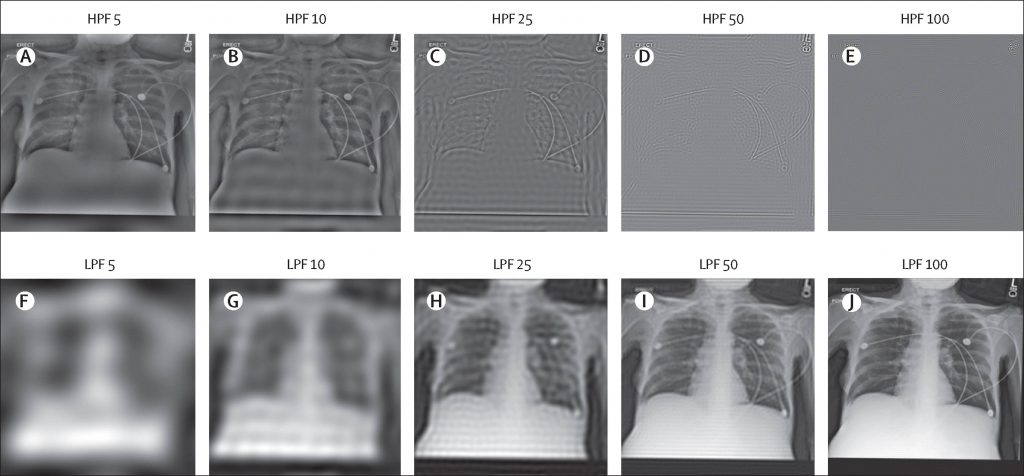

Next, they applied race prediction to other medical images, such as non-chest X-rays and CT scans, to check whether the model’s ability was limited to chest scans. The researchers also examined whether confounders that differ between racial groups, such as body mass index, breast density, and bone density, affected the medical images and could therefore explain the accuracy. The results were striking: even after a major confounding factor, bone density, was removed, the AI’s predictions remained accurate. For example, Black people are generally thought to have higher bone density, and on X-rays denser bone appears whiter while less dense bone appears grayer. The researchers suspected that the AI might use this cue to classify a patient as Black. However, filtering the images to mute these intensity differences did not affect the model’s ability to predict self-reported race accurately.
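To make “filtering” and “lowering resolution” concrete, here is a minimal sketch of two common image degradations applied to a tiny grayscale image stored as nested lists. The article does not say exactly which filters the researchers used; as an assumption, the sketch uses a 3×3 box blur (a simple low-pass filter that smooths local intensity variation) and 2×2 average pooling (a simple way to reduce resolution). The function names and the toy image are hypothetical illustrations, not code from the study.

```python
# Illustrative sketch only: the specific filters are assumptions, not the study's method.

def box_blur(img):
    """3x3 mean filter, a simple low-pass filter.
    Edge pixels average only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            vals = [img[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))]
            out[r][c] = sum(vals) / len(vals)
    return out


def downsample(img, factor=2):
    """Reduce resolution by averaging non-overlapping factor x factor blocks
    (leftover rows/columns that do not fill a block are dropped)."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [[sum(img[r * factor + i][c * factor + j]
                 for i in range(factor) for j in range(factor)) / factor ** 2
             for c in range(w)]
            for r in range(h)]


# Hypothetical 4x4 "scan": a dark region next to a bright (dense) region.
img = [[0, 0, 255, 255] for _ in range(4)]

print(box_blur(img)[0])   # [0.0, 85.0, 170.0, 255.0]  (sharp edge smoothed into a ramp)
print(downsample(img))    # [[0.0, 255.0], [0.0, 255.0]]  (4x4 reduced to 2x2)
```

Both operations throw away fine detail that a human reader might rely on, which is what makes the reported robustness of the race predictions so surprising.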

Overall, blurring the images, reducing them from high to low resolution, and cropping scans to remove critical features did not significantly affect the AI’s predictive ability. The area under the receiver operating characteristic curve (AUC) values for these predictions were a remarkable 0.94 to 0.96.
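AUC can be read as the probability that the model scores a randomly chosen positive example higher than a randomly chosen negative one: 1.0 means perfect separation and 0.5 is chance. For a three-way race classifier, one common convention is one-vs-rest, treating each race in turn as the positive class. The pure-Python sketch below illustrates that calculation; the function names and the toy probabilities are hypothetical and are not data or code from the study.

```python
# Minimal sketch of one-vs-rest AUC; toy data is hypothetical, not from the study.
from itertools import product


def binary_auc(scores, labels):
    """Probability that a random positive outscores a random negative
    (ties count as half). O(P*N), which is fine for a small illustration."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(prob_rows, true_classes, classes):
    """Per-class AUC: column k of prob_rows scores class k against all others."""
    return {
        cls: binary_auc([row[k] for row in prob_rows],
                        [t == cls for t in true_classes])
        for k, cls in enumerate(classes)
    }


classes = ["Asian", "Black", "White"]
# Hypothetical predicted probabilities (one row per image, columns follow `classes`).
probs = [
    [0.7, 0.2, 0.1],
    [0.4, 0.5, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.4, 0.3],
    [0.1, 0.2, 0.7],
    [0.2, 0.1, 0.7],
]
truth = ["Asian", "Asian", "Black", "Black", "White", "White"]

print(one_vs_rest_auc(probs, truth, classes))
# {'Asian': 1.0, 'Black': 0.875, 'White': 1.0}
```

In this toy example, one Asian image receives a higher “Black” score (0.5) than one Black image (0.4), so the Black-vs-rest AUC drops to 7/8 = 0.875 even though the other classes separate perfectly.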

Image Source – https://doi.org/10.1016/S2589-7500(22)00063-2

Co-author Marzyeh Ghassemi, an assistant professor of Electrical Engineering and Computer Science at MIT, says the results were initially confusing because the research team could not identify a good proxy for this task. Even after the medical images were filtered past the point where they were recognizable, the deep models maintained very high performance. This concerns her because such superhuman capabilities are generally much more difficult to control, regulate, and prevent from harming people.

Concluding Remarks

As lead author Dr. Judy Wawira Gichoya told Emory Research News, the real danger lies in the potential to reinforce race-based discrimination in the quality of care patients receive.

This study raises a pressing question: is AI ready to be ethically implemented in patient care? That algorithms can perceive race, and that the researchers could not isolate and remove this ability, poses an enormous risk. The authors urge algorithm developers, regulators, and users to exercise caution, as these models could lead to misdiagnoses and racial disparities in medicine. Once this issue is resolved, and AI models no longer discriminate against patients and instead provide uniform healthcare, such machine learning models could become a viable option at the bedside.

Article Source: Reference Paper | Reference Article 1 | Reference Article 2 | Code Availability: GitHub


Shwetha is a consulting scientific content writing intern at CBIRT. She has completed her Master’s in biotechnology at the Indian Institute of Technology, Hyderabad, with nearly two years of research experience in cellular biology and cell signaling. She is passionate about science communication, cancer biology, and everything that piques her curiosity!