Neuroscientists from the University of Pittsburgh have extended their previously developed hierarchical model of auditory categorization by adding several adaptive neural mechanisms that aid auditory categorization. Humans and animals need to generalize over the vast variability of communication sounds for robust auditory perception. This variability arises both from how a sound is produced and from the environment in which it is produced. Sound or call categorization is thus a many-to-one mapping that sorts a diverse set of acoustic inputs into a small number of behaviorally relevant categories. In their previous hierarchical model, the authors addressed variability at the level of call production. This study explores three biologically feasible model extensions that generalize over environmental variability.

Auditory categorization: How exactly do we perceive and categorize sounds?

Humans and animals have neural circuitry devoted to distinguishing between sound categories, such as mating calls, calls about food, and even calls signaling danger. The wide variety of sounds that humans and animals encounter, and the brain's ability to categorize meaningful communication sounds even in the presence of environmental noise, are remarkable. Despite the amount of noise pollution we are all exposed to, our brains reliably categorize communication sounds. How the auditory circuitry performs this categorization is a central question in auditory neuroscience.

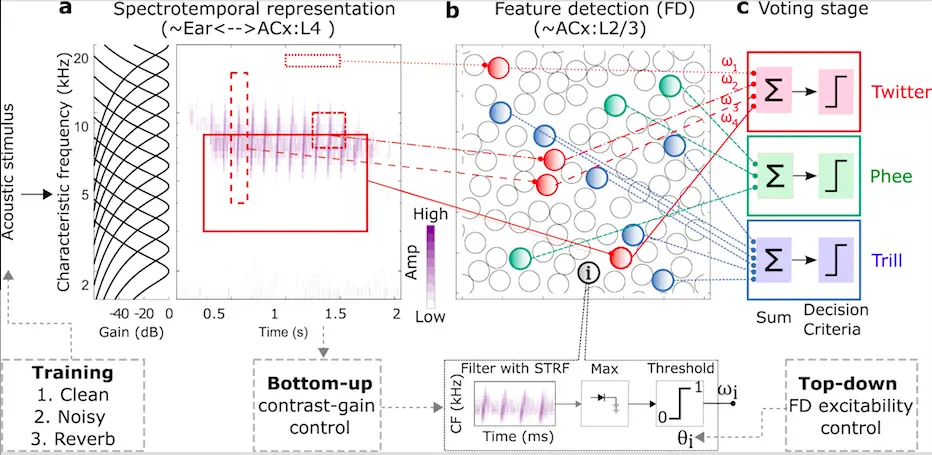

Categorization is not unique to the auditory cortex. Visual perception also involves categorization based on features: face detection algorithms, for instance, detect mid-level features and combine them. Rather than matching every face we encounter to a particular template, our brains build a mental map of facial features and their relative locations. The authors hypothesized that the categorization of communication sounds similarly relies on constituent features that maximally distinguish between call types.

Understanding call classification: Production-invariant and environment-invariant

Call processing is a complex task that involves several crucial steps, viz., detecting calls in the acoustic input, classifying them into behaviorally relevant categories, extracting information about caller identity, determining the behavioral state of the caller, and, lastly, developing situational awareness of the environment. In earlier work, the authors explored the critical first steps: detecting calls and classifying them in a production-invariant manner. In that study, they tested the hypothesis that detecting constituent features that distinguish between call types can accomplish production-invariant call classification, and found that detecting optimal, informative mid-level features does lead to production-invariant classification of call types.
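To make feature-based classification concrete, here is a minimal Python sketch of a classification stage. It assumes, for illustration only, that each detected feature contributes evidence equal to the log ratio of its detection probability within versus outside a call type, and that the call type with the most evidence wins (winner-take-all). The function names, call-type labels, and probabilities are hypothetical and not taken from the authors' code.

```python
import math

def log_likelihood_weight(p_in_class, p_out_class):
    """Evidence contributed by one detected feature: the log ratio of its
    detection probability within the call type versus outside it."""
    return math.log(p_in_class / p_out_class)

def classify(detections, weights):
    """Winner-take-all classification from feature detections.

    detections: {call_type: [1 if feature i was detected else 0, ...]}
    weights:    {call_type: [evidence weight of feature i, ...]}
    Returns the winning call type and the per-type evidence.
    """
    evidence = {
        call_type: sum(w for d, w in zip(detections[call_type], w_list) if d)
        for call_type, w_list in weights.items()
    }
    winner = max(evidence, key=evidence.get)
    return winner, evidence

# Hypothetical example: two features tuned to "twitter" calls, one to "phee" calls.
weights = {
    "twitter": [log_likelihood_weight(0.8, 0.1), log_likelihood_weight(0.6, 0.2)],
    "phee": [log_likelihood_weight(0.9, 0.1)],
}
detections = {"twitter": [1, 1], "phee": [0]}
label, evidence = classify(detections, weights)
print(label)  # twitter
```

Summing log-likelihood ratios treats detections as independent evidence, which is one simple way a many-to-one mapping from features to categories can be realized.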

Image Source: https://doi.org/10.1038/s42003-023-04816-z

The authors started with randomly selected marmoset call features and used a greedy search algorithm to identify the most informative and least redundant features for classification.
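A greedy search of this kind can be sketched in a few lines of Python. This minimal version assumes binary detection vectors (one 0/1 entry per call example) and uses an mRMR-style merit: mutual information with the call label minus the average mutual information with already selected features. The authors' actual merit criterion may differ, and all names and data here are hypothetical.

```python
import math
from collections import Counter

def mutual_information(x, y):
    """Mutual information, in bits, between two equal-length discrete sequences."""
    n = len(x)
    joint = Counter(zip(x, y))
    px, py = Counter(x), Counter(y)
    return sum(
        (c / n) * math.log2((c / n) / ((px[a] / n) * (py[b] / n)))
        for (a, b), c in joint.items()
    )

def merit(j, selected, features, labels):
    """Relevance of feature j to the labels minus its mean redundancy with
    the features already selected (an mRMR-style criterion)."""
    relevance = mutual_information(features[j], labels)
    if not selected:
        return relevance
    redundancy = sum(mutual_information(features[j], features[s]) for s in selected)
    return relevance - redundancy / len(selected)

def greedy_select(features, labels, k):
    """Greedily add the highest-merit remaining feature until k are chosen.

    features: list of detection vectors, one 0/1 entry per call example
    labels:   1 if the call belongs to the target call type, else 0
    """
    selected, remaining = [], list(range(len(features)))
    while remaining and len(selected) < k:
        best = max(remaining, key=lambda j: merit(j, selected, features, labels))
        selected.append(best)
        remaining.remove(best)
    return selected

# Hypothetical detections over 8 calls (the first 4 belong to the target type).
labels = [1, 1, 1, 1, 0, 0, 0, 0]
features = [
    [1, 1, 1, 0, 0, 0, 0, 0],  # informative
    [1, 1, 1, 0, 0, 0, 0, 0],  # exact duplicate of feature 0 (redundant)
    [0, 0, 0, 1, 0, 0, 0, 0],  # weaker, but complements feature 0
    [1, 0, 1, 0, 1, 0, 1, 0],  # uninformative
]
print(greedy_select(features, labels, 2))  # [0, 2]
```

The duplicate feature is as informative as the first pick but adds nothing new, so the redundancy penalty makes the search prefer the complementary feature instead.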

In the present article, the authors explored three biologically feasible model extensions that generalize over environmental variability: training in degraded conditions, adaptation to sound statistics, and sensitivity adjustments at the feature detection stage.
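Two of these mechanisms can be illustrated with a short Python sketch. It assumes a spectrogram stored as a list of frames of per-channel levels, models adaptation to sound statistics as subtraction of a slowly updated per-channel background estimate, and models sensitivity adjustment as a detection threshold that rises with the estimated noise level. Both rules, their parameters, and all names are illustrative assumptions, not the authors' implementation.

```python
def adapt_to_background(spectrogram, alpha=0.05):
    """Hypothetical mean adaptation: subtract a slowly updated per-channel
    background estimate from each frame, keeping only the excess.

    spectrogram: list of frames, each a list of per-channel levels (e.g. dB)
    alpha: update rate of the exponential moving average (assumed value)
    """
    background = list(spectrogram[0])  # initialise from the first frame
    adapted = []
    for frame in spectrogram:
        # Keep only energy above the current background estimate
        adapted.append([max(0.0, x - m) for x, m in zip(frame, background)])
        # Then let the estimate drift toward the current frame
        background = [(1 - alpha) * m + alpha * x for m, x in zip(background, frame)]
    return adapted

def feature_detected(match_score, base_threshold, noise_level, gain=0.5):
    """Hypothetical sensitivity adjustment: the detection threshold of a feature
    detector rises with the estimated noise level (gain is an assumed constant)."""
    return match_score > base_threshold + gain * noise_level

# Steady 10 dB background, then a 30 dB call onset in channel 0.
frames = [[10.0, 10.0]] * 5 + [[30.0, 10.0]]
print(adapt_to_background(frames)[-1])  # [20.0, 0.0]
print(feature_detected(1.0, 0.8, noise_level=0.0))  # True
print(feature_detected(1.0, 0.8, noise_level=1.0))  # False
```

In this sketch a stationary background is gradually cancelled while a sudden call onset passes through, and the same template match that triggers a detection in quiet is rejected when the estimated noise is high.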

All three mechanisms improved model performance when tested in noisy and reverberant environments, although the trend and magnitude of improvement varied across the three mechanisms.
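Noisy and reverberant test conditions of this kind are commonly generated by adding noise at a target signal-to-noise ratio (SNR) and convolving the signal with a decaying room impulse response. The Python sketch below assumes white Gaussian noise and a synthetic, exponentially decaying impulse response parameterized by RT60 (the time for reverberant energy to fall by 60 dB); the authors' stimuli may have been generated differently, and the tone used here is only a stand-in for a call. The same functions could also produce degraded examples for training.

```python
import math
import random

def power(x):
    """Mean-square power of a sequence."""
    return sum(v * v for v in x) / len(x)

def add_noise_at_snr(signal, snr_db, rng):
    """Add white Gaussian noise scaled so that 10*log10(P_signal / P_noise) == snr_db."""
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    scale = math.sqrt(power(signal) / (power(noise) * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(signal, noise)]

def synthetic_rir(rt60, fs, rng):
    """Assumed reverberation model: Gaussian noise with an exponential envelope
    whose amplitude falls by a factor of 1000 (60 dB) after rt60 seconds."""
    n = int(rt60 * fs)
    decay = math.log(1000.0) / n  # per-sample amplitude decay rate
    rir = [rng.gauss(0.0, 1.0) * math.exp(-decay * i) for i in range(n)]
    rir[0] = 1.0  # direct-path sound
    return rir

def convolve(x, h):
    """Full linear convolution (plain Python, adequate for short examples)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xv in enumerate(x):
        for j, hv in enumerate(h):
            y[i + j] += xv * hv
    return y

# Hypothetical stand-in for a call: a 100 ms, 440 Hz tone sampled at 8 kHz.
fs = 8000
rng = random.Random(0)
call = [math.sin(2 * math.pi * 440 * t / fs) for t in range(fs // 10)]
noisy = add_noise_at_snr(call, snr_db=5.0, rng=rng)
reverberant = convolve(call, synthetic_rir(rt60=0.1, fs=fs, rng=rng))
```

Scaling the noise from measured powers, rather than fixing its variance, guarantees the requested SNR regardless of the call's loudness.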

The authors reached these conclusions through experiments involving marmosets and guinea pigs. To test whether brain responses actually correspond to the machine learning model, they recorded brain activity from guinea pigs listening to calls from other guinea pigs. Specifically, they recorded neuronal activity from brain regions responsible for auditory processing while the guinea pigs heard sounds containing features characteristic of specific call types, analogous to the features used by the ML model.

The animals were then tested on call categorization under environmental variability. The guinea pigs heard calls altered by added echoes and noise, as well as by altered pitch, and the authors found that the animals detected the calls consistently despite these environmental degradations. The machine learning model captured the underlying neural mechanisms for this task well.

Conclusion

Understanding the neural mechanisms behind call classification matters not only for basic science but also because it could significantly aid therapeutics for hearing loss. Across their previous work and the current article, the authors have shown that, as in facial recognition, auditory categorization involves detecting optimal features, and that adaptive mechanisms facilitate the classification of communication calls in an environment-invariant manner. These findings will further our understanding of the biological processes and computations underlying auditory processing and may open doors to new methods based on deep learning and machine learning that could lead to improved, personalized therapeutics for hearing loss.

Article Source: Reference Paper | Reference Article


Banhita is a consulting scientific writing intern at CBIRT. She's a mathematician turned bioinformatician. She has gained valuable experience in bioinformatics while working at esteemed institutions like KTH, Sweden, and NCBS, Bangalore. Banhita holds a Master's degree in Mathematics from the prestigious IIT Madras, as well as the University of Western Ontario in Canada. She is deeply passionate about scientific writing, making her an invaluable asset to any research team.