In a groundbreaking advance, researchers at Stanford University have developed a speech brain-computer interface (BCI) that holds significant promise for people with paralysis. The BCI captures the neural signals generated during attempted speech using intracortical microelectrode arrays. A participant with amyotrophic lateral sclerosis (ALS), who could no longer speak intelligibly, achieved a 9.1% word error rate with a 50-word vocabulary and a 23.8% word error rate with a 125,000-word vocabulary. This is the first successful demonstration of decoding speech from a large vocabulary with such technology. The BCI also decoded speech at 62 words per minute, 3.4 times faster than the previous record. In addition, the study found that a small cortical region carries enough information for accurate decoding and that detailed speech representations persist even after years of paralysis. These findings point toward restoring efficient communication for paralyzed people who have lost the ability to speak.

The Neural Blueprint of Speech Production: Insights into Orofacial Movement Representation

How the motor cortex organizes orofacial movement and speech production at single-neuron resolution is poorly understood. To explore this, the researchers recorded neural activity through four microelectrode arrays: two in the ventral premotor cortex (area 6v) and two in area 44, part of Broca's area. The participant, who had bulbar-onset ALS, retained limited orofacial movement and vocalization but could not produce intelligible speech. Area 6v showed strong tuning across all tested movement categories.

This tuning allowed orofacial movements, phonemes, and words to be decoded with high accuracy. In contrast, area 44, previously linked to higher-order aspects of speech, carried negligible information about these categories. Within area 6v, speech decoding was more accurate from the ventral array, particularly during the instructed delay period, which aligns with language-associated networks identified in fMRI data.

Although the ventral array carried more speech information, both the ventral and dorsal arrays contained substantial information about all movement types. The study also showed that tuning to speech articulators was spatially intermixed at the single-electrode level, with the jaw, larynx, lips, and tongue all represented within the arrays. This contradicts previous suggestions of a broader somatotopic organization and instead highlights how finely speech articulators are intermixed at the neuron level.

In short, the research shows that speech articulation is represented robustly and in a spatially distributed way, supporting the feasibility of a speech BCI even in people with paralysis and with limited cortical coverage. Because area 44 carried little speech-production information, subsequent analyses used only the area 6v recordings.
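The article does not say which classifier was used to decode orofacial movements, phonemes, and words from each array. Purely as an illustration, the sketch below estimates cross-validated decoding accuracy with a nearest-centroid classifier and leave-one-out cross-validation, assuming each trial has already been summarized as a vector of per-electrode firing rates. The function name and input format are hypothetical.

```python
import numpy as np

def nearest_centroid_loo_accuracy(features: np.ndarray, labels: np.ndarray) -> float:
    """Leave-one-out accuracy of a nearest-centroid classifier.

    features: (n_trials, n_electrodes) firing rates per trial (hypothetical format)
    labels:   (n_trials,) integer category (movement, phoneme, or word) per trial
    """
    n_trials = features.shape[0]
    correct = 0
    for i in range(n_trials):
        train = np.arange(n_trials) != i  # hold out trial i
        classes = np.unique(labels[train])
        # Mean activity pattern of each category, computed without the held-out trial
        centroids = np.stack([features[train & (labels == c)].mean(axis=0) for c in classes])
        dists = np.linalg.norm(centroids - features[i], axis=1)
        correct += int(classes[np.argmin(dists)] == labels[i])
    return correct / n_trials
```

Comparing this accuracy between arrays (for example, area 6v versus area 44) and against chance level, one over the number of categories, is one simple way to ask how much task information each array carries.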

Cracking the Code of Attempted Speech

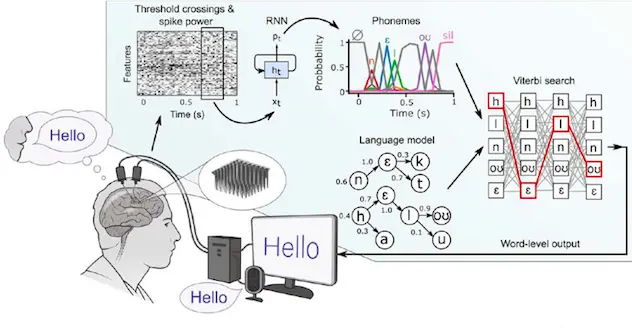

The researchers next investigated whether complete sentences could be decoded from neural activity in real time. A recurrent neural network (RNN) decoder computed the probability of each phoneme being spoken every 80 ms. A language model then combined these phoneme probabilities with the statistics of English to predict the most likely word sequence.
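The article does not describe how per-bin phoneme probabilities become a phoneme string. One common approach for RNN decoders trained with connectionist temporal classification (CTC), assumed here rather than taken from the article, is greedy decoding: pick the most likely symbol in each 80 ms bin, merge consecutive repeats, and drop a special "blank" symbol. The phoneme inventory and probabilities below are hypothetical, and in the real system the language model, not this greedy rule, chooses the final words.

```python
import numpy as np

# Hypothetical phoneme inventory; index 0 is the CTC "blank" symbol (an assumption of this sketch)
PHONEMES = ["<blank>", "HH", "EH", "L", "OW"]

def greedy_ctc_decode(probs: np.ndarray, symbols=PHONEMES, blank: int = 0) -> list:
    """Collapse per-bin probabilities (n_bins x n_symbols) into a phoneme sequence."""
    best = probs.argmax(axis=1)  # most likely symbol in each 80 ms bin
    decoded = []
    prev = None
    for idx in best:
        # Skip blanks and merge consecutive repeats of the same symbol
        if idx != blank and idx != prev:
            decoded.append(symbols[idx])
        prev = idx
    return decoded

# Usage with made-up probabilities: per-bin winners are HH HH <blank> EH L L <blank> <blank> OW
seq = [1, 1, 0, 2, 3, 3, 0, 0, 4]
probs = np.full((len(seq), len(PHONEMES)), 0.05)
probs[np.arange(len(seq)), seq] = 0.8  # each row sums to 1.0
print(greedy_ctc_decode(probs))  # ['HH', 'EH', 'L', 'OW']
```

The blank symbol is what lets a genuine double phoneme survive: two identical phonemes separated by a blank are kept as two, while adjacent repeats collapse into one.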

The training data consisted of sentences the participant attempted to speak at their own pace, combined with data from previous days using a customized machine-learning approach. The RNN's real-time performance was then evaluated on sentences it had not seen during training. During attempts at both vocalized and silent speech, the participant achieved a 9.1% word error rate with a 50-word vocabulary and a 23.8% word error rate with a 125,000-word vocabulary.
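Word error rate, the metric behind the 9.1% and 23.8% figures, is conventionally computed as the word-level edit distance (substitutions, deletions, and insertions) between the decoded sentence and the intended sentence, divided by the number of words in the intended sentence. The sketch below implements that standard definition in plain Python; the function name and example sentences are invented for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # dp[i][j] = edit distance between the first i reference words and first j hypothesis words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(dp[i - 1][j] + 1,        # deletion
                           dp[i][j - 1] + 1,        # insertion
                           dp[i - 1][j - 1] + sub)  # match or substitution
    return dp[len(ref)][len(hyp)] / len(ref)

print(word_error_rate("i want some water", "i want water"))  # 0.25 (one deletion, four words)
```

Over a whole evaluation set, edit counts and reference word counts are usually summed across sentences before dividing, so longer sentences carry proportionally more weight.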

Decoding ran at 62 words per minute, far faster than previous brain-computer interfaces. Even before the language model was applied, the RNN often produced sensible phoneme sequences. The researchers also examined how speech-production information was distributed across the electrode arrays and found that the ventral 6v array contributed more than the dorsal array.

Combining both arrays improved performance further. Offline analyses also indicated that accuracy could be improved by refining the language model, and that the decoder performed better when evaluated on sentences recorded close in time to its training data. These findings suggest that better language models and decoding algorithms that adapt to changing neural features could achieve even higher accuracy.

The Neural Encoding of Phonemes

To study how area 6v represents phonemes during attempted speech, the researchers had to work without ground-truth articulatory data, because the participant could not speak intelligibly. Instead, they examined the trained RNN decoders and extracted saliency vectors: the neural input patterns that maximize the RNN's output probability for each phoneme.
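The article does not give the exact saliency procedure. A common way to find the input direction that most increases a network's output probability is to take the gradient of that output with respect to the input. The sketch below assumes, for simplicity, a single linear softmax readout in place of the study's RNN, so the gradient of log p(phoneme k) has a closed form; with an RNN, the same gradient would come from automatic differentiation. The names `W`, `b`, `x`, and `saliency_vector` are hypothetical.

```python
import numpy as np

def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def saliency_vector(W: np.ndarray, b: np.ndarray, x: np.ndarray, k: int) -> np.ndarray:
    """Gradient of log p(phoneme k) with respect to the neural input x.

    Assumes a linear softmax readout, logits = W @ x + b, with W of shape
    (n_phonemes, n_features). For this model, d log p_k / dx = W.T @ (onehot_k - p).
    """
    p = softmax(W @ x + b)
    onehot = np.zeros_like(p)
    onehot[k] = 1.0
    return W.T @ (onehot - p)
```

Computing one such vector per phoneme yields a set of neural patterns that can then be compared with articulatory measurements.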

The researchers then compared the neural representations of consonants with their articulatory patterns, as measured by electromagnetic articulography in people with typical speech. The two were notably correlated (r = 0.61, p < 1e-4), and the correspondence was especially clear when consonants were grouped by place of articulation. It extended to finer structure as well, such as the correlated representations of nasal consonants and the dual articulatory character of the "W" sound. In a reduced-dimensional representation, the neural and articulatory configurations closely matched.
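Because neural and articulatory measurements live in different spaces, a correlation like the reported r = 0.61 is typically computed by comparing similarity structure rather than raw signals. The sketch below assumes one neural vector and one articulatory vector per consonant, builds a phoneme-by-phoneme Euclidean distance matrix for each (the choice of distance is an assumption), and correlates their unique off-diagonal entries. The function name is hypothetical, and the reported p-value would additionally require a significance test, for example shuffling phoneme labels, which is not shown.

```python
import numpy as np

def similarity_correlation(neural: np.ndarray, articulatory: np.ndarray) -> float:
    """Pearson correlation between two phoneme-by-phoneme distance matrices.

    neural:       (n_phonemes, n_neural_dims), e.g. one saliency vector per consonant
    articulatory: (n_phonemes, n_artic_dims), e.g. articulator positions per consonant
    """
    def pairwise_dist(X: np.ndarray) -> np.ndarray:
        diff = X[:, None, :] - X[None, :, :]
        return np.linalg.norm(diff, axis=-1)

    d_neural = pairwise_dist(neural)
    d_artic = pairwise_dist(articulatory)
    iu = np.triu_indices(len(neural), k=1)  # each phoneme pair counted once
    return float(np.corrcoef(d_neural[iu], d_artic[iu])[0, 1])
```

Comparing distance matrices makes the result insensitive to rotations and uniform scaling of either space, which is why the two measurement types can be compared at all.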

Vowels, by contrast, are organized in a two-dimensional articulatory space, and the saliency vectors mirrored this structure: vowels that are articulated similarly had similar neural patterns. Notably, the neural activity occupied a plane reflecting the two articulatory dimensions of vowels. The findings held up under alternative analysis approaches, and together they show that a detailed articulatory code for phonemes persists in neural activity even after years of paralysis.
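One common way to check whether vowel-related activity lies on a plane is principal component analysis: if the top two components explain most of the variance across vowel saliency vectors, the patterns are approximately two-dimensional, and their 2-D coordinates can be compared with a vowel chart. The article does not state that this exact procedure was used; the function below is a hypothetical sketch built on NumPy's SVD.

```python
import numpy as np

def top2_pca(vectors: np.ndarray):
    """Project row vectors (e.g. one saliency vector per vowel) onto the top two principal components.

    Returns the 2-D coordinates and the fraction of variance explained by each of the two components.
    """
    centered = vectors - vectors.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing singular value
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    coords = centered @ vt[:2].T
    explained = s**2 / np.sum(s**2)
    return coords, explained[:2]
```

A sum of the two explained-variance fractions close to 1 indicates that the vowel patterns are well described by a plane.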

Optimizing Speech Brain-Computer Interfaces: Key Design Considerations for Enhanced Performance

The researchers explored three fundamental design considerations aimed at improving the accuracy and usability of speech BCIs. First, they examined how language-model vocabulary size affects accuracy by re-analyzing data from the 50-word set with varying vocabulary sizes. Substantial accuracy gains appeared only with small vocabularies (e.g., 50-100 words); because word error rates plateaued at around 1,000 words, intermediate vocabulary sizes offer little benefit for accuracy.

Second, they investigated how the number of electrodes used for RNN decoding affects accuracy. Accuracy improved consistently, following a log-linear trend, as the electrode count increased, suggesting that future intracortical devices recording from more electrodes could yield further gains.
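A log-linear trend means the error rate changes by roughly a constant amount each time the electrode count is multiplied by a fixed factor. The sketch below fits word error rate against the base-10 logarithm of electrode count by least squares; the function names are hypothetical and no values from the study are included. Because word error rate cannot fall below zero, such a fit should not be extrapolated far beyond the measured range.

```python
import numpy as np

def fit_log_linear(n_electrodes, word_error_rates):
    """Least-squares fit of WER = intercept + slope * log10(n_electrodes)."""
    x = np.log10(np.asarray(n_electrodes, dtype=float))
    y = np.asarray(word_error_rates, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)  # polyfit returns the highest-degree coefficient first
    return slope, intercept

def predict_wer(slope, intercept, n_electrodes):
    """Word error rate predicted by the fitted log-linear model."""
    return intercept + slope * np.log10(n_electrodes)
```

Under this model, each doubling of the electrode count shifts the predicted word error rate by slope × log10(2), or about 0.301 × slope.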

Finally, the research highlighted the importance of a substantial amount of daily training data (260-440 sentences) for tailoring the decoder to day-to-day changes in neural activity. Interestingly, performance remained good even without daily retraining, which suggests that unsupervised algorithms could update decoders as neural activity gradually changes over time.
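As one illustration of a label-free update, the sketch below z-scores incoming neural features using exponentially weighted running estimates of their mean and variance, so slow drifts in baseline firing rates are removed before a decoder sees them. This is a generic technique chosen for illustration, not the study's method; the class name and the `alpha` update rate are hypothetical.

```python
import numpy as np

class AdaptiveFeatureNormalizer:
    """Unsupervised z-scoring of neural features with exponentially weighted running statistics.

    Needs no labels: it only tracks the mean and variance of incoming feature bins.
    """

    def __init__(self, n_features: int, alpha: float = 0.01, eps: float = 1e-6):
        self.alpha = alpha  # update rate: larger values track drift faster but are noisier
        self.eps = eps      # guards against division by zero
        self.mean = np.zeros(n_features)
        self.var = np.ones(n_features)

    def update_and_normalize(self, x: np.ndarray) -> np.ndarray:
        # Exponential moving averages of the per-feature mean and variance
        self.mean = (1 - self.alpha) * self.mean + self.alpha * x
        self.var = (1 - self.alpha) * self.var + self.alpha * (x - self.mean) ** 2
        return (x - self.mean) / np.sqrt(self.var + self.eps)
```

The update rate trades off responsiveness against stability: with `alpha = 0.01`, the running statistics effectively average over roughly the last 100 bins.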

Conclusion

Neurological disorders such as ALS often cause severe speech impairments. Recent BCIs based on hand movements have improved typing speeds for people with paralysis. Speech BCIs promise faster communication but still need to become more accurate with large vocabularies. This study introduces a speech BCI that decodes sentences at 62 words per minute, outperforming alternative technologies. Challenges remain: decoder training time, adaptability to neural changes, and microelectrode technology all need refinement, and the results should be validated in additional participants to address concerns about individual differences. The 24% word error rate also leaves room for improvement. Increasing the number of channels and refining decoding algorithms could lower error rates, and in offline tests, better language models and strategies for handling nonstationarities already reduced errors. Furthermore, the rich representation of speech articulation within small cortical areas points to a feasible route toward rapidly restoring communication.

Article Source: Reference Paper | Reference Article

Important Note: bioRxiv releases preprints that have not yet undergone peer review. These papers should therefore not be considered conclusive evidence, nor should they be used to direct clinical practice or influence health-related behavior. The information they present is not yet considered established or confirmed.


Neegar is a consulting scientific content writing intern at CBIRT. She's a final-year student pursuing a B.Tech in Biotechnology at Odisha University of Technology and Research. Neegar's enthusiasm is sparked by the dynamic and interdisciplinary aspects of bioinformatics. She possesses a remarkable ability to elucidate intricate concepts using accessible language. Consequently, she aspires to amalgamate her proficiency in bioinformatics with her passion for writing, aiming to convey pioneering breakthroughs and innovations in the field of bioinformatics in a comprehensible manner to a wide audience.