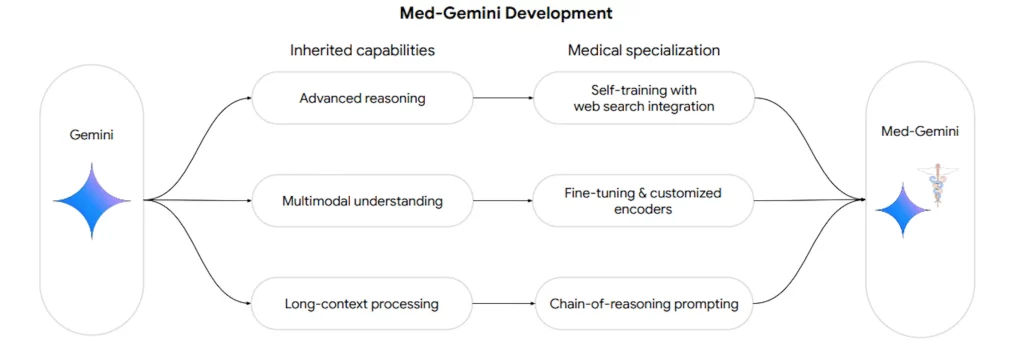

AI faces significant obstacles to excelling across a wide range of medical applications, which demand sophisticated reasoning, access to current medical knowledge, and comprehension of complex multimodal input. With their strong general capabilities in multimodal and long-context reasoning, Gemini models present promising opportunities in medicine. Google researchers present Med-Gemini, a family of highly capable multimodal models that are specialized in medicine and can seamlessly integrate web search. Building on the core strengths of Gemini 1.0 and Gemini 1.5, these models can be efficiently tailored to novel modalities using custom encoders.

Introduction

Medicine has many facets, including the delivery of treatment and the clear communication of diagnoses and treatment plans by professionals. This entails knowing the patient’s medical history, staying current with medical knowledge, and applying multimodal reasoning to medical images. The art of providing care depends on sophisticated clinical reasoning, on combining intricate data from several sources, and on working well with other medical professionals. AI systems have the potential to support multimodal, multitask medical applications in addition to helping with individual medical tasks. Advancing multimodal, long-context understanding and reasoning would enable more capable and practical assistive technologies for patients and clinicians.

Large language models (LLMs) and large multimodal models (LMMs) have shown promise in encoding clinical knowledge and perform well on medical question-answering benchmarks, even for complex cases and scenarios that require specialized knowledge. However, because medical data is unique and safety is critical, strong benchmark performance does not by itself predict real-world utility. Medically fine-tuned LLMs can provide high-quality long-form answers to complex, open-ended medical questions and can outperform physicians on dimensions such as factual accuracy, reasoning, harm, and bias. When generating radiology reports, LMMs such as Flamingo-CXR and Med-PaLM M are comparable to radiologists in controlled settings. The Articulate Medical Intelligence Explorer (AMIE) model outperforms primary care physicians on multiple evaluation axes in text-based diagnostic consultations.

Key Features of the Study

- Researchers present Med-Gemini, a family of highly capable multimodal medical models built on Gemini. Through self-training and web search integration, they improve the models’ capacity for clinical reasoning.

- Researchers also improve multimodal performance through fine-tuning and customized encoders. Med-Gemini models attain state-of-the-art (SoTA) performance on ten of the fourteen medical benchmarks spanning text, multimodal, and long-context applications, and they outperform the GPT-4 model family on every benchmark where a direct comparison could be made.

- The bar chart displays the models’ relative percentage improvements over the previous SoTA across the benchmarks. In particular, the researchers set a new SoTA on the MedQA (USMLE) benchmark, 4.6% above the previous record held by Med-PaLM 2.

- A re-analysis of the MedQA dataset with expert clinicians also reveals that 7.4% of the questions are unsuitable for evaluation because they omit important details, contain erroneous answers, or allow several plausible interpretations.

- Researchers take these problems with data quality into consideration to better characterize the model’s performance.

- As demonstrated by their SoTA performance on several benchmarks, such as the needle-in-a-haystack retrieval from lengthy, de-identified health records and the medical video question-answering benchmarks, Med-Gemini models excel in multimodal and long-context capabilities.

- Beyond benchmarks, researchers also show the practical applications of Med-Gemini with qualitative examples of multimodal medical dialogue and quantitative evaluation on medical summarization, medical simplification, and referral letter generation tasks where the models outperform human experts.
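The MedQA figures in the list above are easy to misread, because a "4.6% improvement" can mean an absolute gap in accuracy or a gain relative to the old score. The sketch below shows both readings, together with a re-scoring step that drops questions flagged as unsuitable. It assumes the 4.6 figure is an absolute difference from the 91.1% accuracy reported later in this article (the article does not say which reading applies), and the function names and toy question IDs are hypothetical, not taken from the paper.

```python
from typing import Dict, Set


def absolute_gain(new_acc: float, old_acc: float) -> float:
    """Difference between two accuracies, in percentage points."""
    return new_acc - old_acc


def relative_gain(new_acc: float, old_acc: float) -> float:
    """Improvement expressed as a percentage of the old accuracy."""
    return (new_acc - old_acc) / old_acc * 100.0


def accuracy_excluding(results: Dict[str, bool], flagged: Set[str]) -> float:
    """Accuracy (in %) over the questions that were not flagged as unsuitable."""
    kept = [correct for qid, correct in results.items() if qid not in flagged]
    if not kept:
        raise ValueError("no questions left after filtering")
    return 100.0 * sum(kept) / len(kept)


if __name__ == "__main__":
    new_acc = 91.1           # MedQA accuracy reported in the article
    old_acc = new_acc - 4.6  # ASSUMPTION: 4.6 is an absolute gap, not a relative one
    print(f"absolute gain: {absolute_gain(new_acc, old_acc):.1f} points")
    print(f"relative gain: {relative_gain(new_acc, old_acc):.1f}%")

    # Hypothetical toy results: question id -> whether the model answered correctly.
    toy_results = {"q1": True, "q2": False, "q3": False, "q4": True}
    toy_flagged = {"q3"}     # e.g. a question judged to have an erroneous label
    print(f"accuracy on all questions: {accuracy_excluding(toy_results, set()):.1f}%")
    print(f"accuracy after filtering:  {accuracy_excluding(toy_results, toy_flagged):.1f}%")
```

Filtering changes the denominator as well as the numerator, so a score on the cleaned subset is not directly comparable to one on the full benchmark unless both models are re-scored the same way.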

Understanding Med-Gemini

The Med-Gemini model family is specifically designed and optimized for the medical field. Impressive demonstrations of what such systems can do have drawn considerable attention to the idea of generalist medical AI models. Although the generalist approach is a worthwhile line of medical research, practical considerations impose trade-offs and call for task-specific optimizations that can conflict with one another. In this work, the researchers do not aim to build a single generalist medical AI system. Instead, they present a family of models, each tuned for different capabilities and use cases, taking into account factors such as inference latency, compute availability, and training data.

Med-Gemini builds on Gemini’s multimodal and language understanding and has demonstrated enhanced performance on long-context reasoning tests. Combining web search with an inference-time, uncertainty-guided search strategy inside an agent framework yields more accurate, reliable, and nuanced results. As a result, the model reaches SoTA performance on MedQA (USMLE) with 91.1% accuracy, which is 4.6% higher than the prior Med-PaLM 2 models. The uncertainty-guided search technique also generalizes to the GeneTuring benchmark and to the clinico-pathological conference (CPC) cases published in the New England Journal of Medicine (NEJM). Med-Gemini’s performance on these benchmarks points to practical applications, especially for tasks such as clinical referral letter generation and medical note summarization.
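The article does not spell out how the uncertainty-guided search works, so the following is only a minimal sketch of one plausible reading: sample several answers, measure their disagreement with Shannon entropy, and call web search only when disagreement is high. The callables `sample_answers` and `search`, the threshold, and the round limit are all hypothetical placeholders rather than Med-Gemini's actual interface.

```python
import math
from collections import Counter
from typing import Callable, List


def answer_entropy(answers: List[str]) -> float:
    """Shannon entropy (in bits) of the empirical distribution of sampled answers."""
    total = len(answers)
    counts = Counter(answers)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def uncertainty_guided_answer(
    question: str,
    sample_answers: Callable[[str, List[str], int], List[str]],  # hypothetical model call
    search: Callable[[str], List[str]],                          # hypothetical web search call
    n_samples: int = 5,
    entropy_threshold: float = 0.5,  # ASSUMPTION: arbitrary illustrative cutoff
    max_rounds: int = 2,
) -> str:
    """Return the majority answer, retrieving extra context while samples disagree."""
    context: List[str] = []
    answers = sample_answers(question, context, n_samples)
    for _ in range(max_rounds):
        if answer_entropy(answers) <= entropy_threshold:
            break  # the samples mostly agree, so no search is needed
        context.extend(search(question))  # add retrieved snippets to the prompt context
        answers = sample_answers(question, context, n_samples)
    return Counter(answers).most_common(1)[0][0]


# Stand-in stubs so the sketch runs without any model or network access.
def fake_sampler(question: str, context: List[str], n: int) -> List[str]:
    # Disagrees without context, agrees once a snippet has been retrieved.
    return ["B"] * n if context else ["A", "B", "C", "B", "A"][:n]


def fake_search(question: str) -> List[str]:
    return ["retrieved snippet relevant to the question"]


if __name__ == "__main__":
    print(uncertainty_guided_answer("toy question", fake_sampler, fake_search))
```

Gating search on disagreement keeps retrieval cost proportional to how hard a question is: confident answers skip the search step entirely, and only uncertain ones pay for additional rounds.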

Conclusion

Large multimodal language models are opening up new avenues of research and application in medicine and health. The capabilities of Gemini and Med-Gemini point to a major expansion in the breadth and depth of opportunities to accelerate biomedical discoveries and improve healthcare experiences. However, improvements in model capabilities must be matched with careful attention to the reliability and safety of these systems. By giving equal weight to both, researchers can responsibly envision a future in which artificial intelligence (AI) capabilities serve as meaningful and safe catalysts for advances in science and medicine.

Article Source: Reference Paper | Due to safety implications, the model code is not open-sourced. In due course, it will be available via Google Cloud APIs.

Important Note: arXiv releases preprints that have not yet undergone peer review. As a result, it is important to note that these papers should not be considered conclusive evidence, nor should they be used to direct clinical practice or influence health-related behavior. It is also important to understand that the information presented in these papers is not yet considered established or confirmed.

Deotima is a consulting scientific content writing intern at CBIRT. She is currently pursuing a Master's in Bioinformatics at Maulana Abul Kalam Azad University of Technology. As an emerging scientific writer, she is eager to apply her expertise to making intricate scientific concepts comprehensible to readers from diverse backgrounds. Deotima has a particular passion for Structural Bioinformatics and Molecular Dynamics.