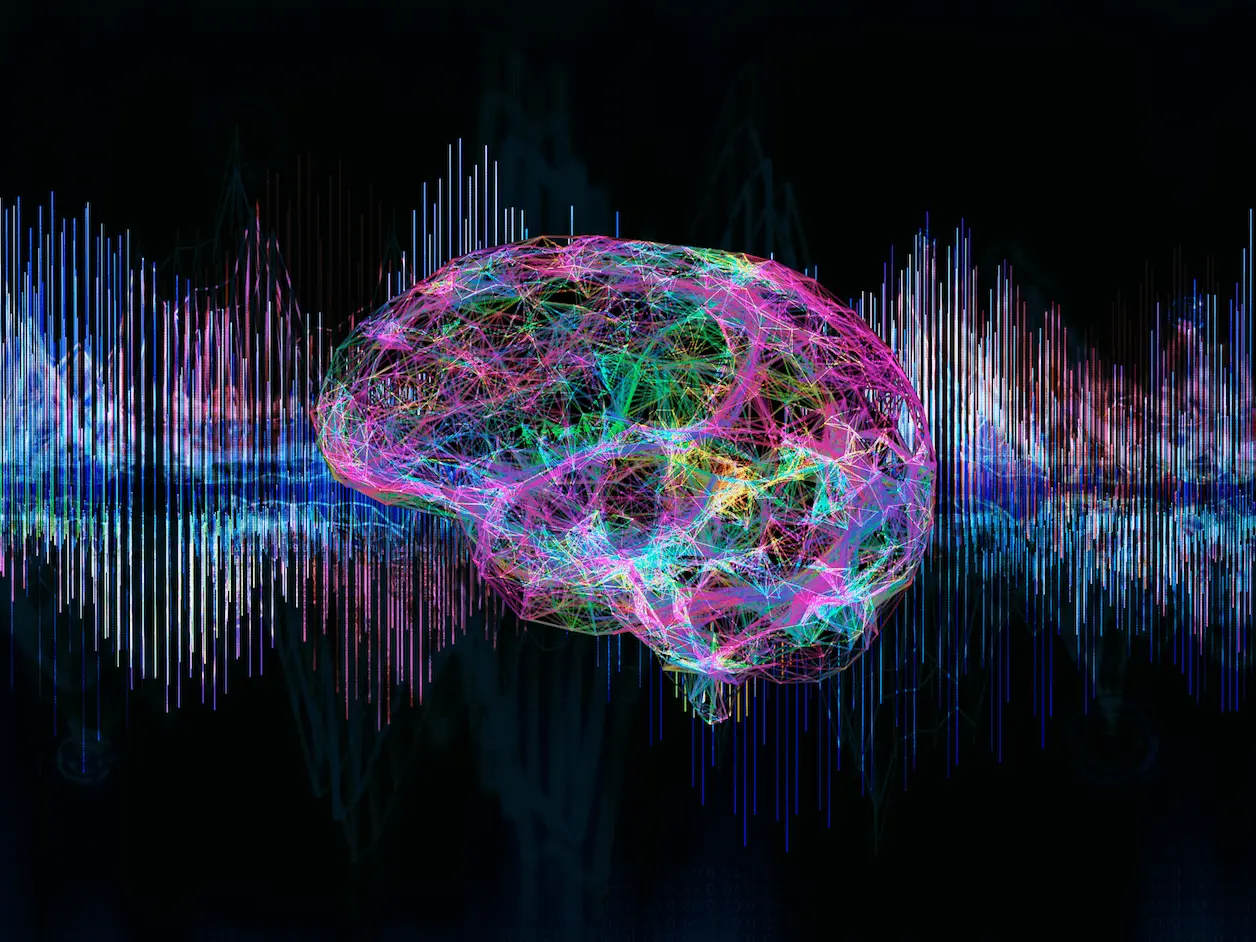

One of the primary challenges facing contemporary neuroscience is building accurate computational models of the body’s sensory systems. Such a model could, in theory, reproduce the outputs of a biological sensory system when given identical stimuli. It could also improve our understanding of how the brain functions in different situations and guide the creation of brain-machine interfaces. A group of researchers at the Massachusetts Institute of Technology has shown that deep neural networks exhibit a hierarchical organization similar to that of the human auditory system, and that these networks show promise for predicting cortical responses to auditory stimuli.

A common approach to building such models is to optimize them for a task under certain constraints, which should, in theory, lead them to replicate biological responses. Growing interest in machine learning and artificial intelligence has enabled deep neural networks to match human accuracy in tasks such as speech recognition and object recognition. As a result, a new generation of computational models is emerging with the capacity to replicate human behavior in domains like vision, auditory processing, and language processing. Such networks have been explored extensively in vision, where they reproduce certain aspects of primate behavior. These findings suggest that optimizing models for biologically relevant tasks encourages them to develop the hierarchical organization seen in humans, resulting in a more accurate description of the brain’s behavior.

Challenges and Obstacles in the Use of Computational Models

These successes, while encouraging, contrast with cases in which models differ significantly from humans in behavior. For example, many models are vulnerable to adversarial perturbations: small changes to the input that alter the model’s output but are imperceptible to humans. Some models also fail to generalize to manipulations of stimuli the way humans do, or they produce similar outputs for stimuli that humans consider dissimilar (of different object classes). Such discrepancies are a potential obstacle to the creation of more advanced brain-machine interfaces.
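The idea of an adversarial perturbation can be illustrated with a toy sketch. The linear classifier, its weights, the input values, and the class labels below are all hypothetical and are not taken from any model in the study; the point is only that a change of at most 0.05 per input dimension can flip the decision.

```python
# Toy illustration only: a hypothetical two-class linear classifier,
# not any model from the study.

def score(weights, bias, x):
    """Linear decision score; a positive score means 'speech', otherwise 'music'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def classify(weights, bias, x):
    return "speech" if score(weights, bias, x) > 0 else "music"

def adversarial_step(weights, x, epsilon):
    """Shift every input dimension by exactly epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to the input
    is the weight vector, so stepping along -sign(w) lowers the score as much
    as possible when each dimension may change by at most epsilon.
    """
    def sign(v):
        return (v > 0) - (v < 0)
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.5, -0.3, 0.8, -0.6]   # hypothetical learned weights
bias = 0.0
x = [0.2, 0.1, 0.1, 0.2]           # hypothetical input features

x_adv = adversarial_step(weights, x, epsilon=0.05)

print(classify(weights, bias, x), "->", classify(weights, bias, x_adv))
```

Deep networks are not linear, but the same principle applies: many small, coordinated input changes can add up to a large change in the output.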

Alongside the developments in vision, audition has also generated significant interest within the machine learning community. Comparisons of human and model behavior often show that neural networks replicate human behavior patterns when they are optimized for biologically relevant tasks and given the corresponding stimuli. For example, deep neural networks trained to classify music and speech predicted the responses of the auditory cortex to sound much more accurately than standard models based on spectrotemporal filters. This advantage of task-trained models over traditional models in predicting neural responses is consistent across many studies.

However, these prior studies were limited in scope. Each compared only a relatively small number of models, and each used a different dataset, which makes their results difficult to compare. In addition, it is still not understood whether deep neural networks trained on other kinds of sound and optimized for different tasks will replicate these results, or whether the choice of dataset and task plays a substantial role in determining a model’s accuracy.

The Use of Deep Neural Networks in Predicting Cortical Responses: The Path Ahead

To answer these questions, the researchers examined brain-DNN similarities across a large number of computational models. They tested and compared publicly available models of different kinds, as well as new models constructed for the study. The quality of each model’s predictions was evaluated relative to a standard baseline model of the human auditory cortex, as was the correspondence between brain regions and model stages. Several metrics were employed for this purpose, and two separate fMRI datasets were used to assess the robustness and reproducibility of the outcomes. One of these datasets had also been used in a prior study, allowing the new results to be compared with earlier ones. Because subcortical responses are difficult to measure, only responses from the auditory cortex were used.
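One way to picture the comparison against a baseline is to score each model’s predicted responses against measured responses on held-out sounds. The sketch below assumes a squared-correlation score, which is only one plausible choice of metric; the article does not specify which metrics the study used. All response values and the names `baseline_pred` and `dnn_pred` are invented for illustration.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def explained_variance(measured, predicted):
    """Squared correlation, with negative correlations counted as zero."""
    r = pearson_r(measured, predicted)
    return max(r, 0.0) ** 2

# Hypothetical held-out responses of one voxel to six test sounds.
measured = [0.2, 1.1, 0.7, 1.8, 0.4, 1.5]
# Hypothetical predictions from a baseline model and from a DNN stage.
baseline_pred = [0.9, 0.8, 0.6, 1.2, 1.0, 0.9]
dnn_pred = [0.3, 1.0, 0.9, 1.6, 0.5, 1.3]

baseline_score = explained_variance(measured, baseline_pred)
dnn_score = explained_variance(measured, dnn_pred)
print(f"baseline: {baseline_score:.3f}, DNN: {dnn_score:.3f}")
```

In practice such a score would be computed per voxel and averaged over many voxels and participants, with predictions obtained from a mapping fitted on separate training sounds.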

Deep neural networks almost always produced more accurate predictions than the baseline model. These models also showed a strong correspondence between brain regions and model stages. For example, the anterior, posterior, and lateral regions of the auditory cortex were better predicted by deeper model stages. These results show that most DNN models predict neural responses more accurately than traditional models.
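The correspondence between model stages and brain regions can be summarized by asking which stage best predicts each region. The following is a minimal sketch with made-up scores; the `primary` row is an assumed point of comparison that the article does not mention explicitly, and the five-stage model is hypothetical.

```python
# Hypothetical prediction scores per model stage, ordered shallow -> deep.
scores_by_region = {
    "primary":   [0.30, 0.45, 0.52, 0.48, 0.40],  # assumed comparison region
    "lateral":   [0.20, 0.30, 0.41, 0.50, 0.47],
    "anterior":  [0.15, 0.25, 0.35, 0.44, 0.49],
    "posterior": [0.18, 0.28, 0.40, 0.46, 0.45],
}

def best_stage_depth(scores):
    """Return the best-predicting stage as a relative depth in [0, 1]."""
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return best_index / (len(scores) - 1)

depths = {region: best_stage_depth(s) for region, s in scores_by_region.items()}

for region, depth in depths.items():
    print(f"{region}: best stage at relative depth {depth:.2f}")
```

Expressing the best stage as a relative depth, rather than a raw layer index, makes models with different numbers of stages comparable.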

However, not all models were equally accurate. Some types of models performed better than others, indicating that the optimization task and training dataset significantly affect a model’s outcome. For example, models trained in noise made demonstrably better predictions than those trained in quiet. The training task also had a significant impact on the results, with models trained on multiple tasks producing the best results.
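Training "in noise" generally means mixing background noise into each clean training sound. As a rough sketch, assuming the noise is added at a chosen signal-to-noise ratio (SNR), the mixing step might look like the code below. The tone, the Gaussian noise, the 16 kHz sampling rate, and the 0 dB SNR are illustrative assumptions, not the study’s actual training setup.

```python
import math
import random

def rms(signal):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in signal) / len(signal))

def mix_at_snr(clean, noise, snr_db):
    """Scale the noise so the clean-to-noise RMS ratio equals snr_db, then add it."""
    gain = rms(clean) / (rms(noise) * 10 ** (snr_db / 20))
    return [c + gain * n for c, n in zip(clean, noise)]

rng = random.Random(0)  # fixed seed so the example is repeatable

# Hypothetical clean sound: 0.1 s of a 440 Hz tone sampled at 16 kHz.
clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
# Hypothetical background: white Gaussian noise of the same length.
noise = [rng.gauss(0.0, 1.0) for _ in range(1600)]

noisy = mix_at_snr(clean, noise, snr_db=0.0)
```

A model trained "in quiet" would see `clean` directly, while a model trained in noise would see `noisy`, typically with a different background and SNR for every training example.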

Conclusion

These results were replicated across both datasets, showing that DNNs can reproduce neural responses when subject to the same constraints as the brain. They are also consistent with the idea that the auditory cortex was shaped by the operations it had to perform for auditory processing, such as filtering, normalization, and pooling.

These results shed light on how the brain functions and open up new avenues for research in neurocognition, with potential applications in new assistive devices for individuals with hearing impairments and in better brain-machine interfaces.

Article Source: Reference Paper | Reference Article

The code is available from the GitHub repo: https://github.com/gretatuckute/auditory_brain_dnn/. An archived version is available at https://zenodo.org/record/8349726 (DOI: 10.5281/zenodo.8349726).

Sonal Keni is a consulting scientific writing intern at CBIRT. She is pursuing a BTech in Biotechnology from the Manipal Institute of Technology. Her academic journey has been driven by a profound fascination for the intricate world of biology, and she is particularly drawn to computational biology and oncology. She also enjoys reading and painting in her free time.