Researchers affiliated with the MIT-IBM Watson AI Lab and Harvard Medical School have proposed a hypothesis about how neuron-astrocyte networks in the brain contribute to cognitive functions and information processing. They construct a computational neuron-astrocyte model that is functionally equivalent to the Transformer, an AI architecture, and use it to interpret the core computations such networks might carry out. The work highlights neuron-astrocyte communication as a largely unexplored component of brain function, one that may support robust computation flexibly and ubiquitously in the brain. Asking whether Transformers can be built from biological computational units, the study shows that neuron-astrocyte networks could, in principle, naturally implement the core computation performed by Transformer blocks.

Astrocyte-Neuron Communication: Interpretation of Their Computational Functions

The intricate mechanisms of brain function are still a mystery. Even a decade ago, neuroscience studies focused almost exclusively on neuronal connections, or synapses, to explain neurological events, while astrocytes, a type of glial cell and the most abundant cells of the central nervous system (CNS), were regarded as passive contributors.

Astrocytes, which are present throughout the CNS, are now gaining attention for their active roles. They have been shown to regulate neuronal circuits, actively control synaptic transmission, and contribute to cognitive functions such as learning and memory. Neurons and astrocytes are also closely interlaced: together they form tripartite synapses, each consisting of a presynaptic axon, a postsynaptic dendrite, and an astrocyte process. A single astrocyte can connect with thousands to millions of nearby synapses.

Against this background, the researchers address a gap in understanding what interacting networks of neurons and astrocytes compute, in terms of information processing and cognitive function. To elucidate neuron-astrocyte communication, they draw on Transformers, a flourishing AI architecture.

Neuron–Astrocyte Network: The Study Demonstrates Their Correspondence to Transformers

Artificial neural networks were inspired by biological neurons and strive to replicate how neurons process information and signals. Among them, Transformers have become the default architecture for many applications in machine learning and computational neuroscience.

In contrast to conventional neural networks, which have well-established biological implementations, Transformers are only beginning to receive a biological interpretation. The main obstacle is their distinctive self-attention operation, which lets a Transformer learn long-range dependencies between words in a sentence without recurrently maintaining a hidden state over long time intervals.

The researchers were motivated by several observations: the recent success of Transformers in foundation models and generative AI for natural language processing and speech recognition, their state-of-the-art performance in vision processing, their architectural similarities to the hippocampus and cerebellum, and their representational similarities with human brain recordings. On this basis, they hypothesized that biological neuron–astrocyte networks could perform the core computations of a Transformer. Such a correspondence would also address the open question of how Transformers could be implemented biologically.

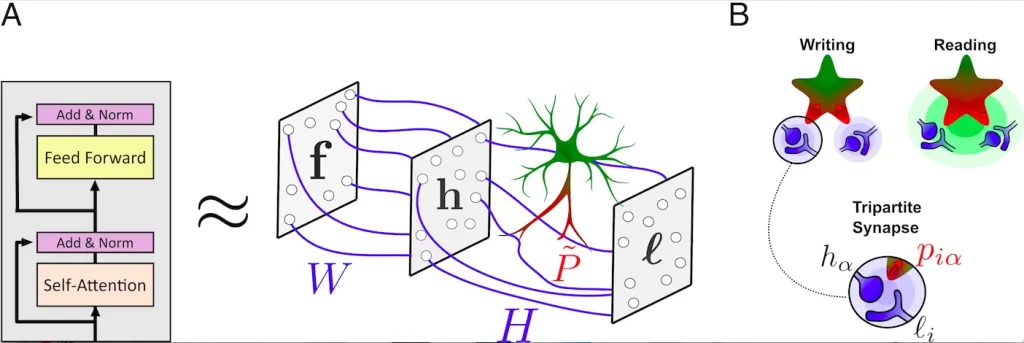

The researchers explicitly construct an artificial neuron-astrocyte network whose internal mechanics and outputs approximate those of a Transformer with high probability.

A Transformer consists of several blocks that use four operations: self-attention, feed-forward neural network, layer normalization, and skip connections. The arrangement of the operations assists the blocks in learning relationships between the tokens.
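To make the four operations concrete, here is a minimal NumPy sketch of a single Transformer block. It is an illustration only, not the model from the study: it assumes one attention head, a ReLU feed-forward layer, layer normalization applied after each skip connection, and no learned scale or bias in the normalization. All function and parameter names (`transformer_block`, `init_params`, `w_q`, and so on) are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean and (roughly) unit variance.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def self_attention(x, w_q, w_k, w_v):
    # Every token compares its query with the keys of all tokens.
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    return softmax(scores, axis=-1) @ v

def transformer_block(x, params):
    # Operation 1 (self-attention) wrapped in a skip connection and layer norm.
    attended = self_attention(x, params["w_q"], params["w_k"], params["w_v"])
    x = layer_norm(x + attended)
    # Operation 2 (feed-forward network), again with skip connection and layer norm.
    hidden = np.maximum(0.0, x @ params["w_1"])
    return layer_norm(x + hidden @ params["w_2"])

def init_params(d_model, d_hidden, seed=0):
    # Random weights, purely for demonstration (assumed shapes, no training).
    rng = np.random.default_rng(seed)
    scale = 1.0 / np.sqrt(d_model)
    return {
        "w_q": rng.normal(scale=scale, size=(d_model, d_model)),
        "w_k": rng.normal(scale=scale, size=(d_model, d_model)),
        "w_v": rng.normal(scale=scale, size=(d_model, d_model)),
        "w_1": rng.normal(scale=scale, size=(d_model, d_hidden)),
        "w_2": rng.normal(scale=scale, size=(d_hidden, d_model)),
    }

# Five tokens, each an 8-dimensional vector.
tokens = np.random.default_rng(42).normal(size=(5, 8))
params = init_params(d_model=8, d_hidden=16)
out = transformer_block(tokens, params)
print(out.shape)
```

The block maps a sequence of token vectors to a sequence of the same shape, which is what allows several blocks to be stacked.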

The tripartite synapse, the ubiquitous three-factor connection between an astrocyte, a presynaptic neuron, and a postsynaptic neuron, is proposed as the principal computational element of the network. In the mathematical model, which is flexible enough to approximate any Transformer, tripartite synapses can perform the normalization step of the Transformer’s self-attention operation. Consequently, the model makes it feasible to compare artificial representations in AI networks explicitly with representations in biological astrocyte networks.
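To show what "normalization in self-attention" refers to, the sketch below splits standard softmax attention into an unnormalized pairwise part and a separate normalizer that sums over all tokens. It does not reproduce the equations of the study. The reading that the normalizer is the kind of quantity a cell contacting many synapses could supply is an illustrative assumption based on the description above, and the function names are hypothetical.

```python
import numpy as np

def attention_with_explicit_normalizer(q, k, v):
    # Pairwise query-key similarities, scaled as in standard attention.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Shifting by the row maximum leaves the final ratio unchanged
    # but keeps the exponentials numerically stable.
    scores = scores - scores.max(axis=-1, keepdims=True)
    unnormalized = np.exp(scores)
    # The normalizer aggregates over all tokens for each query.
    # Assumption for illustration: this global sum is the step the
    # article attributes to tripartite synapses.
    normalizer = unnormalized.sum(axis=-1, keepdims=True)
    return (unnormalized @ v) / normalizer, normalizer

def reference_softmax_attention(q, k, v):
    # Conventional formulation, used only to check equivalence.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v

rng = np.random.default_rng(1)
q = rng.normal(size=(4, 6))
k = rng.normal(size=(4, 6))
v = rng.normal(size=(4, 3))
split_out, normalizer = attention_with_explicit_normalizer(q, k, v)
print(np.allclose(split_out, reference_softmax_attention(q, k, v)))
```

Computing the normalizer separately gives the same result as ordinary softmax attention. The point of the split is that the division by a sum over all tokens is the only step requiring information from the whole sequence at once.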

Image Source: https://doi.org/10.1073/pnas.2219150120

Conclusion

The study demonstrates the functional equivalence of an AI architecture, the Transformer, with a computational neuron-astrocyte model. The model describes communication between astrocytes and neurons in terms of their computational roles and offers a possible explanation for why astrocytes are so plentiful in our nervous system. It also aims to provide a biologically plausible account of how Transformers might be implemented in the brain. Moreover, rather than describing how to model only one particular Transformer, the work shows how to approximate all possible Transformers using neurons and astrocytes.

Although using Transformers to understand the intricate networks of neurons and astrocytes is enticing, we shouldn’t forget that the brain acquires information quite differently from a Transformer, which requires massive datasets; it would therefore be inappropriate to equate an individual’s learning process with the training of a Transformer. Nonetheless, the research points to a striking parallel between the involvement of astrocytes in many different tasks and the success of Transformers across various computational tasks. Further, the study raises the prospect of using Transformers to help predict astrocyte-related brain disorders.

Story Source: Reference Paper

Aditi is a consulting scientific writing intern at CBIRT, specializing in explaining interdisciplinary and intricate topics. As a student pursuing an Integrated PG in Biotechnology, she is driven by a deep passion for experiencing multidisciplinary research fields. Aditi is particularly fond of the dynamism, potential, and integrative facets of her major. Through her articles, she aspires to decipher and articulate current studies and innovations in the Bioinformatics domain, aiming to captivate the minds and hearts of readers with her insightful perspectives.