Scientists from the University of Southern Denmark, NaturalAntibody and Alector Therapeutics have unveiled nanoBERT, a nanobody-specific transformer designed to predict the amino acid at a given position in a query sequence. On nanobody sequences, nanoBERT outperforms human antibody language models and the general protein model ESM-2, demonstrating the benefits of domain-specific language modeling. Fine-tuning on a thermostability dataset suggests potential for engineering therapeutic nanobodies, although the results so far are mixed.

Nanobodies, also known as single-domain antibodies, are an emerging class of antibody-derived therapeutics offering numerous advantages over conventional antibodies. Recent computational advances like deep learning provide new opportunities to streamline nanobody engineering for therapeutic applications.

In this article, we’ll explore how transformers – a type of deep learning model – can support key aspects of nanobody design, like predicting feasible mutations. We’ll cover transformer approaches for modeling nanobody sequences, benchmarking against other methods, and potential fine-tuning applications.

Nanobodies – The Next Wave of Antibody Therapeutics

Nanobodies comprise a single peptide chain containing the binding region, unlike complete antibodies with two heavy and two light chains. This compact size provides nanobodies with unique biophysical properties like high stability, tissue penetration, and ease of engineering.

With the recent approval of the first nanobody drug, more are likely to follow. However, developing novel therapeutic nanobodies remains challenging. This is where computational methods could help expedite nanobody optimization.

Challenges in Applying Deep Learning to Nanobodies

Many computational techniques useful for antibodies could aid nanobody engineering, like structural modeling or humanization. However, differences between nanobodies and antibodies mean methods need specialized tuning for nanobodies.

Deep learning has driven progress in antibody engineering by learning from large antibody sequence datasets. However, nanobodies lack the comprehensive germline gene references that are available for human antibodies. Without reliable gene assignment, constructing training datasets is difficult.

Nanobody hypermutation patterns also differ from canonical antibodies. These obstacles have limited the straightforward application of deep learning so far. New approaches to overcome these data challenges are needed.

Introducing NanoBERT – A Transformer for Nanobody Sequences

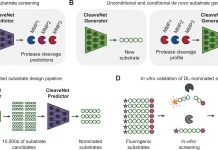

To enable deep learning on nanobodies, researchers developed nanoBERT – a transformer model adapted from natural language processing.

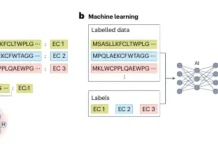

Transformers represent language by learning associations between words from large corpora. For proteins, each amino acid is a word, and the full sequence is a sentence.
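The "amino acid as a word" idea can be made concrete with a minimal sketch of residue-level tokenization. The special-token names, the vocabulary order and the example fragment are assumptions chosen for illustration; they are not taken from nanoBERT's actual tokenizer.

```python
# Illustrative vocabulary: special tokens plus the 20 standard amino acids.
# Token names and ids are assumptions, not nanoBERT's real vocabulary.
SPECIAL_TOKENS = ["<pad>", "<cls>", "<eos>", "<mask>"]
AMINO_ACIDS = list("ACDEFGHIKLMNPQRSTVWY")
VOCAB = {tok: i for i, tok in enumerate(SPECIAL_TOKENS + AMINO_ACIDS)}


def tokenize(sequence: str) -> list[str]:
    """Split a protein sequence into one token per residue."""
    tokens = list(sequence.upper())
    unknown = sorted(set(tokens) - set(AMINO_ACIDS))
    if unknown:
        raise ValueError(f"non-standard residues: {unknown}")
    # Sentence boundaries are marked with start and end tokens.
    return ["<cls>"] + tokens + ["<eos>"]


def encode(sequence: str) -> list[int]:
    """Map a sequence to integer ids, the form a transformer consumes."""
    return [VOCAB[tok] for tok in tokenize(sequence)]


fragment = "QVQLVESGGG"  # hypothetical fragment, for illustration only
print(tokenize(fragment))
print(encode(fragment))
```

Because every residue is its own token, the vocabulary stays tiny compared with a natural-language model, and a "sentence" is simply the full variable-domain sequence.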

NanoBERT was trained on 10 million nanobody NGS sequences to predict masked amino acids from the surrounding context, without using germline gene assignments. This avoids reliance on complete germline references.
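The masked-prediction objective can be sketched in a few lines of plain Python. This is a simplified illustration, not the authors' training code: the 15% masking fraction is the common BERT default and is an assumption here, and the function name `mask_sequence` is hypothetical. Standard BERT-style training also sometimes replaces a selected token with a random one or leaves it unchanged, which is omitted for brevity.

```python
import random

MASK = "<mask>"


def mask_sequence(sequence, mask_fraction=0.15, seed=0):
    """Hide a random subset of residues in a non-empty sequence.

    Returns (masked_tokens, labels), where labels maps each masked
    position to the residue the model would be trained to recover.
    """
    rng = random.Random(seed)
    n_masked = max(1, round(len(sequence) * mask_fraction))
    positions = sorted(rng.sample(range(len(sequence)), n_masked))
    tokens = list(sequence)
    labels = {}
    for pos in positions:
        labels[pos] = tokens[pos]
        tokens[pos] = MASK
    return tokens, labels


fragment = "QVQLVESGGGLVQAGGSLRL"  # hypothetical fragment, illustration only
masked, labels = mask_sequence(fragment)
print(masked)
print(labels)
```

Note that nothing in this procedure needs a germline gene label: the training signal comes entirely from the sequence itself, which is why the approach sidesteps the incomplete nanobody germline references.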

Benchmarks Reveal Advantages of Nanobody-Specific Modeling

To assess nanoBERT’s capabilities, the authors benchmarked against general protein and human antibody-specific transformers on three tasks:

- Natural nanobody sequence reconstruction

- Human antibody sequence reconstruction

- Therapeutic nanobody sequence reconstruction

On the natural nanobody test set, nanoBERT significantly outperformed other models, achieving ~76% accuracy versus ~64% for human-based models. It also surpassed the general protein transformer.
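Reconstruction accuracy of this kind can be computed by hiding one residue at a time and checking whether the model recovers it. The sketch below assumes that protocol, which may differ in detail from the paper's, and uses a toy per-position consensus "model" plus made-up sequences as a stand-in for a real language model. All function names and data are hypothetical.

```python
from collections import Counter


def fit_position_frequencies(train_seqs):
    """Toy stand-in for a language model: most common residue per position."""
    counts = {}
    for seq in train_seqs:
        for pos, aa in enumerate(seq):
            counts.setdefault(pos, Counter())[aa] += 1
    return {pos: c.most_common(1)[0][0] for pos, c in counts.items()}


def reconstruction_accuracy(predict, test_seqs):
    """Mask every position in turn and count how often predict() recovers it."""
    correct = total = 0
    for seq in test_seqs:
        for pos, true_aa in enumerate(seq):
            masked = seq[:pos] + "X" + seq[pos + 1:]  # "X" marks the hidden residue
            correct += predict(masked, pos) == true_aa
            total += 1
    return correct / total


# Made-up sequences, for illustration only.
consensus = fit_position_frequencies(["QVQLV", "QVQLQ", "EVQLV"])


def predict(masked_seq, pos):
    # The toy model ignores context; a transformer would use masked_seq.
    return consensus.get(pos, "X")


print(reconstruction_accuracy(predict, ["QVQLV", "EVKLV"]))  # 0.8
```

Swapping `predict` for a call into a real model, and restricting the inner loop to CDR positions, would give region-specific figures such as the CDR comparison discussed for therapeutic nanobodies.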

Conversely, the human antibody models performed much better than nanoBERT on the human dataset, as expected. Taken together, the two results indicate the benefits of domain-specific language modeling: each model does best on the kind of sequence it was trained on.

For therapeutic nanobodies, nanoBERT still showed some advantages, correctly reconstructing more CDR residues than human models. This suggests value in nanobody-specific modeling for engineering.

Applications in Humanization and Fine-Tuning

NanoBERT could assist computational humanization, that is, identifying mutations that make potentially immunogenic regions more human-like. Comparing the residues favored at each position under the human and nanobody distributions can guide which substitutions to make.
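One way to picture the comparison of distributions is a filter that proposes a substitution only when the human-preferred residue is also plausible for a nanobody. This is a hypothetical sketch, not the authors' humanization procedure: the thresholds, the function name and the probability tables are invented for illustration, and in practice the per-position probabilities would come from a human antibody model and from nanoBERT.

```python
def suggest_humanizing_substitutions(sequence, human_probs, nanobody_probs,
                                     min_human_gain=0.2, min_nanobody_prob=0.05):
    """List positions where a human-preferred residue is also nanobody-plausible.

    human_probs / nanobody_probs: one dict per position, residue -> probability.
    Returns tuples of (position, current residue, suggested residue, gain).
    """
    suggestions = []
    for pos, current in enumerate(sequence):
        human, nano = human_probs[pos], nanobody_probs[pos]
        best = max(human, key=human.get)
        gain = human[best] - human.get(current, 0.0)
        plausible = nano.get(best, 0.0) >= min_nanobody_prob
        if best != current and gain >= min_human_gain and plausible:
            suggestions.append((pos, current, best, round(gain, 3)))
    return suggestions


# Invented probabilities for a three-residue toy sequence.
sequence = "AKF"
human_probs = [{"A": 0.9, "S": 0.1},
               {"K": 0.2, "R": 0.7, "Q": 0.1},
               {"F": 0.1, "V": 0.9}]
nanobody_probs = [{"A": 0.8, "S": 0.2},
                  {"K": 0.6, "R": 0.3, "Q": 0.1},
                  {"F": 0.97, "V": 0.03}]

print(suggest_humanizing_substitutions(sequence, human_probs, nanobody_probs))
```

In the toy data the last position is left alone even though the human distribution strongly prefers a different residue, because the nanobody distribution rates that residue as very unlikely. A rule like this keeps residues that nanobodies appear to need, at the cost of leaving some positions less human-like.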

Interestingly, several current therapeutic nanobodies retain nanobody-preferred residues over human ones, highlighting ongoing uncertainties around optimizing these molecules.

Beyond sequence reconstruction, transformers can be fine-tuned on small task-specific datasets such as experimental measurements. NanoBERT showed mixed results on one thermostability dataset, so larger benchmarks are needed to fully assess its transfer learning potential.

Limitations and Open Questions

The benchmarks revealed that, unlike human-based models, nanoBERT has limited ability to distinguish species from sequence alone. This likely results from the far smaller volume of training data available compared with human antibody sequencing.

With just one nanobody drug approved so far, critical immunogenicity data remains scarce. More clinical insights will be essential to properly evaluate computational strategies like transformers against real-world immunogenicity.

Future work should also determine if conclusions generalize to additional nanobody engineering problems beyond the initial tests here. Larger, standardized benchmarks will clarify where deep learning provides advantages or limitations.

Conclusion

Nanobodies are an extremely promising new modality, but designing novel therapeutics requires overcoming unique challenges. Transformer models like nanoBERT offer new routes to leverage deep learning, but domain-specific training data remains scarce.

Creative solutions combining learned and physics-based approaches may ultimately outperform pure deep learning techniques. Still, the progress made in benchmarking nanoBERT represents an important step towards democratizing nanobody engineering through accessible computational tools.

Story source: Reference Paper | nanoBERT model is available at https://huggingface.co/NaturalAntibody/

Important Note: bioRxiv releases preprints that have not yet undergone peer review. As a result, it is important to note that these papers should not be considered conclusive evidence, nor should they be used to direct clinical practice or influence health-related behavior. It is also important to understand that the information presented in these papers is not yet considered established or confirmed.

Dr. Tamanna Anwar is a Scientist and Co-founder of the Centre of Bioinformatics Research and Technology (CBIRT). She is a passionate bioinformatics scientist and a visionary entrepreneur. Dr. Tamanna has worked as a Young Scientist at Jawaharlal Nehru University, New Delhi. She has also worked as a Postdoctoral Fellow at the University of Saskatchewan, Canada. She has several scientific research publications in high-impact research journals. Her latest endeavor is the development of a platform that acts as a one-stop solution for all bioinformatics related information as well as developing a bioinformatics news portal to report cutting-edge bioinformatics breakthroughs.