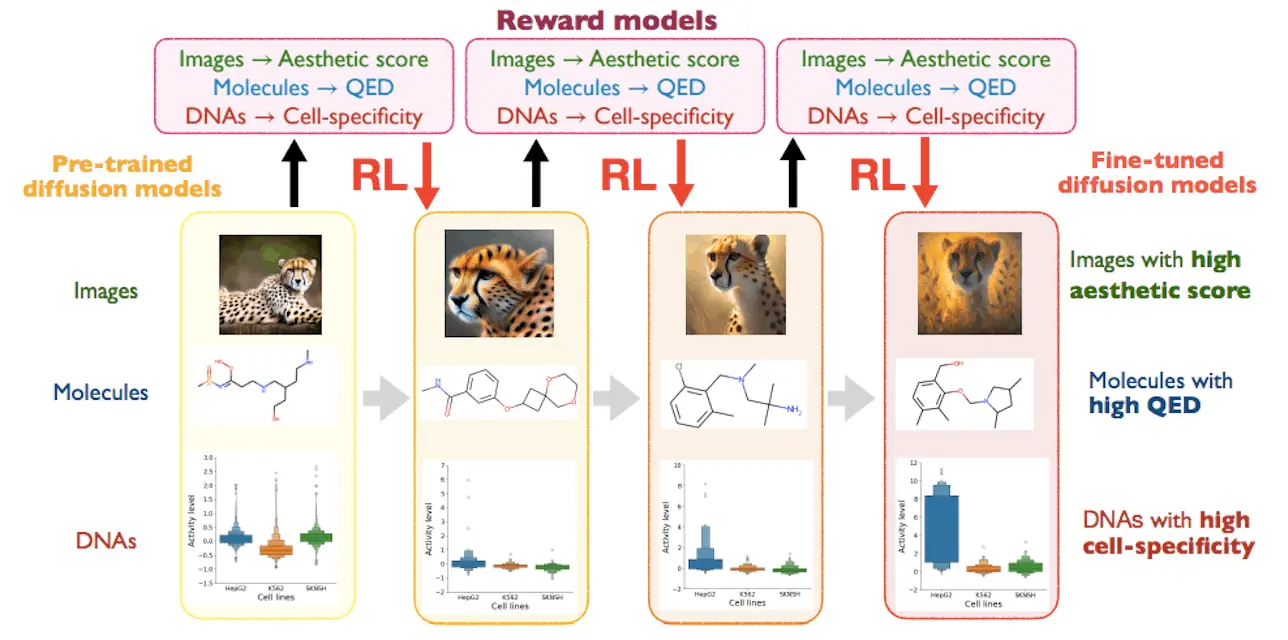

Diffusion models provide excellent generative modeling capability, but practical applications, particularly in biology, need more than realistic samples: they need samples that maximize some desired metric, such as docking score for molecules or stability for proteins. Meeting that need means fine-tuning the model to optimize a downstream reward function.

Researchers from Genentech, Princeton University, and the University of California, Berkeley, showcase how Reinforcement Learning (RL) can be used in fine-tuning Diffusion Models. They discuss RL concepts that are utilized to optimize diffusion models for specific reward functions and various algorithms like Proximal Policy Optimization (PPO), differentiable optimization, reward-weighted Maximum Likelihood Estimation (MLE), value-weighted sampling, and path consistency learning. They explore the strengths and weaknesses of different RL-based fine-tuning algorithms across various scenarios, as well as the benefits of RL-based fine-tuning compared to non-RL-based approaches.

Introduction

Imagine you are a chef with the best recipe for a cake. Now you want to tweak the recipe slightly to make it even better for a particular event, say a wedding. You might add other flavors or change the frosting to a wedding-appropriate color. This is what scientists and engineers do with diffusion models using reinforcement learning: they adjust them to accomplish specific objectives, each expressed as a downstream reward function. (For example, for an RL agent playing a game, the downstream reward function might be the score the agent gets in the game.)

Diffusion Models and Their Power

Diffusion models are like the master recipes of generative modeling. They are very good at creating realistic data because they learn the distribution of the large datasets they are trained on. These models have applications in many fields, such as computer vision, natural language processing, biology, and chemistry.

Fine-Tuning with Reinforcement Learning

A diffusion model may be quite useful for generating proteins in general, but some applications call for one particular kind of output. For example, a diffusion model may be required to produce DNA sequences that have high cell specificity. This is where reinforcement learning comes in, because it treats generation as a task to be optimized toward a goal rather than as imitation of the training data. Fine-tuning diffusion models using RL involves optimizing the models to perform well on specific tasks.
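To make the RL framing concrete, here is a minimal Python sketch under stated assumptions. It treats generation as a sequence of decisions, which is one common way to cast diffusion sampling as an RL problem: the state is the current noisy sample, the action is the next, slightly cleaner sample, and the reward arrives only for the final output. The names `rollout`, `toy_policy_mean`, and `toy_reward`, the one-dimensional data, and the reward itself are hypothetical stand-ins chosen for illustration, not the authors' code.

```python
import numpy as np

def rollout(policy_mean, num_steps, noise_scale, reward_fn, rng):
    """Run one toy denoising trajectory; return the sample, per-step log-probs, and reward."""
    x = rng.normal()                      # initial state: pure noise
    log_probs = []
    for t in range(num_steps, 0, -1):     # step index runs T, ..., 1
        mean = policy_mean(x, t)          # policy output for the current state
        x_next = mean + noise_scale * rng.normal()   # action: the next sample
        # Gaussian log-density of the chosen action under the policy
        log_p = (-0.5 * ((x_next - mean) / noise_scale) ** 2
                 - np.log(noise_scale * np.sqrt(2.0 * np.pi)))
        log_probs.append(log_p)
        x = x_next
    return x, np.array(log_probs), reward_fn(x)      # reward only at the end

def toy_policy_mean(x, t):
    # Hypothetical stand-in for a pre-trained denoiser: shrink toward 0.
    return 0.5 * x

def toy_reward(x):
    # Hypothetical downstream reward: prefers samples near 2.0.
    return -(x - 2.0) ** 2

rng = np.random.default_rng(0)
sample, log_probs, reward = rollout(
    toy_policy_mean, num_steps=10, noise_scale=0.1, reward_fn=toy_reward, rng=rng
)
```

The per-step log-probabilities and the final reward are the quantities a policy-gradient method would consume when updating the model.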

The Basics of Diffusion Models

Pre-trained Diffusion Models: These are models already trained on general data distributions.

Downstream Reward Functions: These are the specific goals or metrics we want the models to optimize for, such as high cell-specific expression in DNA sequences.

Reinforcement Learning Algorithms: These are the methods used to adjust the models to meet the new goals.

Key Algorithms

PPO (Proximal Policy Optimization): This algorithm adjusts the model’s parameters gradually, limiting how far each update can move from the current model so that training stays stable.

Differentiable Optimization: When the reward function is differentiable, its gradient can be passed back through the generation process, giving direct feedback on each parameter and allowing for precise adjustments.

Value-Weighted Sampling: This technique steers generation toward data expected to score high on specific metrics by weighting candidate samples according to an estimate of the reward they will eventually earn.

Path Consistency Learning: This method trains the model so that value estimates at consecutive generation steps agree with one another, keeping each step of the path consistent with the desired outcome.
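As an illustration of the first algorithm in the list above, the sketch below computes PPO's clipped surrogate loss with NumPy. The function name `ppo_clipped_loss`, its argument names, and the example numbers are assumptions made for this illustration. In diffusion fine-tuning the log-probabilities would typically come from individual denoising steps and the advantages from the rewards of the final samples, but how a given codebase wires this up may differ.

```python
import numpy as np

def ppo_clipped_loss(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Clipped surrogate loss from PPO (to be minimized)."""
    # Probability ratio between the updated policy and the policy that
    # generated the samples.
    ratio = np.exp(new_log_probs - old_log_probs)
    unclipped = ratio * advantages
    # Clipping removes the incentive to move the ratio outside
    # [1 - clip_eps, 1 + clip_eps], which keeps each update small.
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    return -np.mean(np.minimum(unclipped, clipped))

# Illustrative numbers only: three actions with ratios 1.0, 1.5, and 0.5.
old_lp = np.log(np.array([0.5, 0.5, 0.5]))
new_lp = np.log(np.array([0.5, 0.75, 0.25]))
adv = np.array([1.0, 1.0, -1.0])

loss = ppo_clipped_loss(new_lp, old_lp, adv)   # about -0.467
```

In this example the second ratio (1.5) is clipped to 1.2 and the third (0.5) to 0.8, so neither large change is rewarded beyond the clipping range.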

How It All Comes Together

When fine-tuning diffusion models with RL, the process involves a series of adjustments:

Start with a Pre-trained Model: Begin with a model already skilled at generating data.

Define the Reward Function: Set a specific goal, such as a high aesthetic score for images or a high QED score (Quantitative Estimate of Druglikeness) for molecules.

Apply RL Algorithms: Use algorithms like PPO or value-weighted sampling to adjust the model’s parameters so that it generates data that earns higher rewards.

Evaluate and Iterate: Continuously evaluate the model’s output and fine-tune further until the desired level of performance is achieved.
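The four steps above can be sketched as a toy loop. This is a deliberately simplified illustration, not a real diffusion pipeline: a one-dimensional Gaussian stands in for the pre-trained model, the reward is a made-up function that peaks at 2, and the update rule is reward-weighted maximum likelihood (one of the algorithms the researchers discuss) rather than PPO or value-weighted sampling. All names and constants here are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Step 1: "pre-trained" generator. A 1-D Gaussian is a toy stand-in
# for a diffusion model (assumption for illustration).
mu, sigma = 0.0, 1.0

# Step 2: hypothetical downstream reward, highest at x = 2.
def reward(x):
    return -(x - 2.0) ** 2

alpha = 1.0  # temperature: smaller values favor high-reward samples more strongly

# Baseline: average reward of samples from the untouched model.
initial_reward = float(reward(rng.normal(mu, sigma, size=512)).mean())

history = []
for iteration in range(20):
    # Step 3: sample from the current model and weight each sample
    # by exp(reward / alpha).
    samples = rng.normal(mu, sigma, size=512)
    r = reward(samples)
    weights = np.exp((r - r.max()) / alpha)   # subtract max for numerical stability
    weights /= weights.sum()
    # Weighted maximum-likelihood update of the mean (sigma kept fixed).
    mu = float(np.sum(weights * samples))
    # Step 4: evaluate the updated model on fresh samples.
    history.append(float(reward(rng.normal(mu, sigma, size=512)).mean()))
```

Over the iterations `mu` drifts from 0 toward 2 and the average reward in `history` rises above `initial_reward`. A real setup would replace the Gaussian with a diffusion model and would usually also keep the fine-tuned model close to the pre-trained one.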

Practical Applications

This fine-tuning process has significant real-world applications. For instance, in biology, fine-tuned diffusion models can generate DNA sequences with high cell-specific expression, which is crucial for targeted therapies. In chemistry, these models can create molecules with high stability and bioactivity, aiding in drug discovery.

Advantages Over Non-RL Methods

Using RL for fine-tuning offers several advantages:

Flexibility: RL algorithms can adapt to various reward functions, making them versatile across domains.

Efficiency: By focusing on maximizing specific rewards, RL fine-tuning can often achieve better results faster than non-RL methods.

Precision: RL allows for precise adjustments, ensuring that the fine-tuned models meet the exact needs of the application.

Conclusion

Fine-tuning diffusion models with reinforcement learning means optimizing them to perform well on specific tasks. By understanding and applying the right RL algorithms, researchers can guide these models to generate data that meets specific goals, unlocking new possibilities in biology, chemistry, and beyond. This approach not only enhances the performance of diffusion models but also broadens their applicability, making them powerful tools for solving complex problems.

Article Source: Reference Paper | The code is available on GitHub.

Important Note: arXiv releases preprints that have not yet undergone peer review. As a result, it is important to note that these papers should not be considered conclusive evidence, nor should they be used to direct clinical practice or influence health-related behavior. It is also important to understand that the information presented in these papers is not yet considered established or confirmed.


Neermita Bhattacharya is a consulting Scientific Content Writing Intern at CBIRT. She is pursuing a B.Tech in computer science at IIT Jodhpur. She has a niche interest in the amalgamation of biological concepts and computer science and wishes to pursue higher studies in related fields. Her many hobbies include swimming, ballet, playing the violin, guitar, and ukulele, singing, drawing and painting, reading novels, playing indie video games, and writing short stories. She is excited to delve deeper into the fields of bioinformatics, genetics, and computational biology and possibly help the world through research!
