In recent years, the integration of artificial intelligence (AI) and machine learning (ML) into scientific domains has advanced significantly, and chemistry is no exception. Large language models (LLMs) can predict molecular properties, design new molecules, optimize synthesis pathways, and accelerate drug and materials discovery. The latest idea is to turn LLMs into agents by giving them access to chemistry-specific tools such as synthesis planners and databases.

This article delves into the role of large language models (LLMs) and autonomous agents in revolutionizing the field of chemistry, based on a comprehensive review by Mayk Caldas Ramos, Christopher J. Collison, and Andrew D. White from the University of Rochester and the Rochester Institute of Technology.

Introduction

The evolution of ML and AI in chemistry began with early computational methods in the mid-20th century. Milestones along the way include expert systems such as DENDRAL, whose development started in the 1960s, and the rise of Quantitative Structure-Activity Relationship (QSAR) models. The 2010s brought transformative advances with deep learning techniques, including Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and Graph Neural Networks (GNNs), applied to molecular property prediction and drug discovery. Recently, the focus has shifted to Large Language Models (LLMs) and autonomous agents, which promise to automate complex tasks, improve predictive accuracy, and enhance data handling, thereby accelerating research and application in chemistry.

The Mechanics of Large Language Models

At the heart of LLMs is the transformer architecture, introduced by Vaswani et al. in 2017. This architecture uses a mechanism known as attention, which lets the model weigh how relevant each part of the input is to every other part. This ability to relate distant pieces of the input is what makes transformers effective across so many tasks.

Let’s take an example:

“The weather’s splendid today. Let’s go and eat something outside our campus.”

In this sentence, the transformer needs to understand that ‘something’ is more closely related to ‘eat’ than to ‘campus.’ This is where attention helps: it assigns a larger weight to ‘eat’ when the model processes ‘something.’
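To make the idea concrete, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The two-dimensional vectors are made up for illustration and the query for ‘something’ is chosen by hand so that it points towards ‘eat’; a real model learns these vectors during training.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Made-up vectors, for illustration only (not taken from any trained model).
tokens = ["eat", "something", "campus"]
keys = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
values = keys
query_for_something = [2.0, 0.0]  # hand-picked to align with "eat"

weights, output = attention(query_for_something, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

With these toy numbers, ‘eat’ receives the largest weight and ‘campus’ the smallest, which is the behaviour described above.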

In chemistry, LLMs can interpret chemical languages such as SMILES (Simplified Molecular Input Line Entry System) to perform functions like molecule generation and reaction prediction.
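Before a model can read a SMILES string, the string has to be split into tokens. The sketch below uses a deliberately simplified regular expression (an assumption for illustration, not a complete SMILES grammar and not the tokenizer of any particular model) that keeps bracket atoms and two-letter halogens together.

```python
import re

# Simplified pattern (an assumption, not a full SMILES grammar):
# bracket atoms, two-letter halogens, two-digit ring closures,
# and finally any single character.
SMILES_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|%\d{2}|.")

def tokenize_smiles(smiles):
    """Split a SMILES string into a list of tokens."""
    return SMILES_TOKEN.findall(smiles)

aspirin = "CC(=O)Oc1ccccc1C(=O)O"
tokens = tokenize_smiles(aspirin)
print(tokens)

# Bracket atoms and halogens stay in one piece.
print(tokenize_smiles("C[C@H](N)C(=O)O"))
print(tokenize_smiles("ClCBr"))
```

Joining the tokens back together reproduces the original string, so no information is lost in this step.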

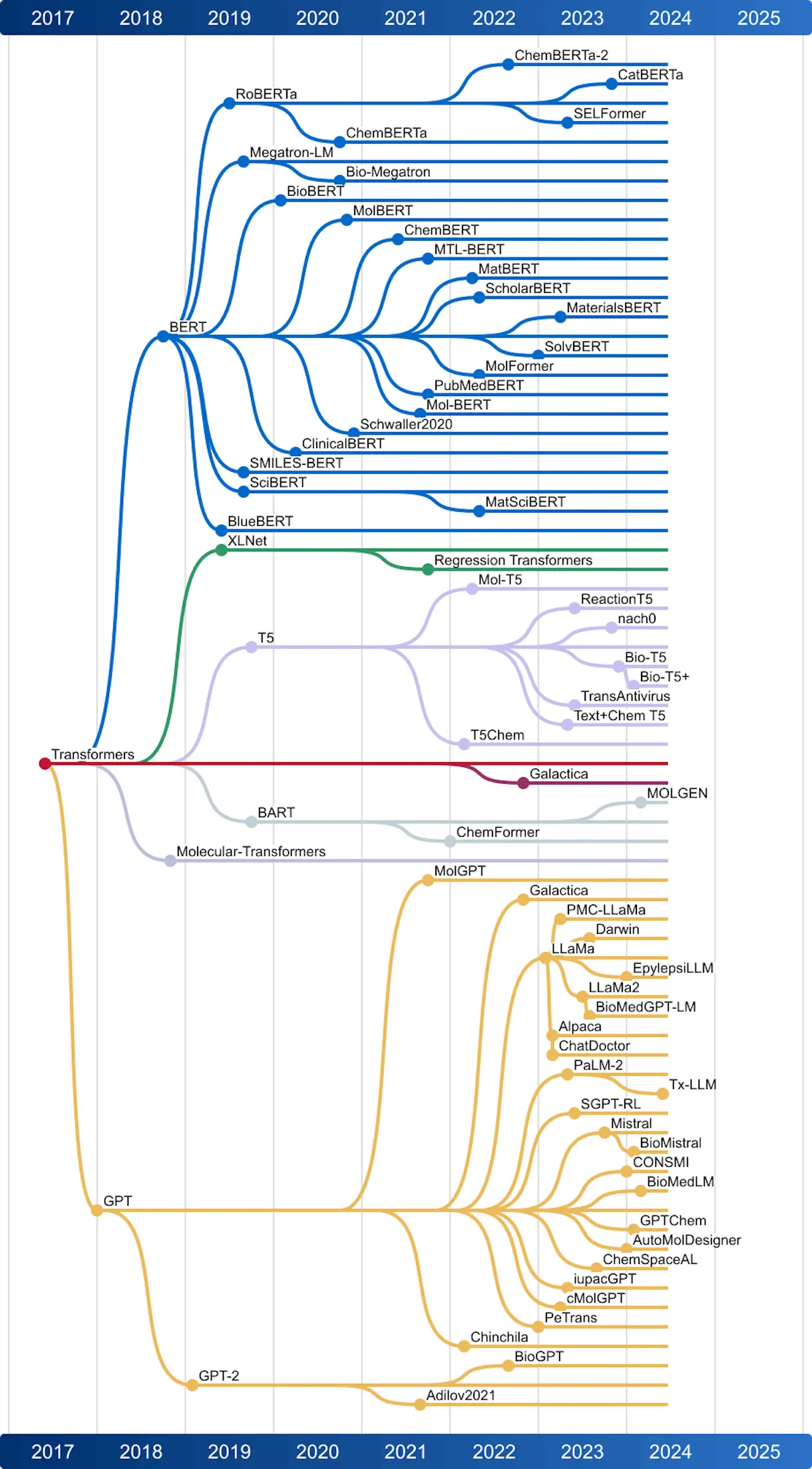

This image shows how different transformer architectures can be used in chemistry.

Image source: https://arxiv.org/pdf/2407.01603

Different Transformer Architectures in Chemistry

Encoder-only models:

Bidirectional Encoder Representations from Transformers (BERT) was introduced by Devlin et al. in 2018. BERT uses only the encoder component, which lets it excel at sentence-level and token-level tasks through bidirectional pretraining. It reads the context on both the left and the right of a word, and it is pretrained with two objectives: masked language modeling (MLM), in which hidden tokens must be recovered, and Next Sentence Prediction (NSP), which teaches it how sentences relate to each other. RoBERTa (Robustly Optimized BERT Pretraining Approach) improved upon BERT by training on a larger corpus for more iterations, using larger mini-batches and longer sequences, and focusing solely on the MLM task, which resulted in better generalization. Encoder-only models focus on understanding and classifying data. In chemistry they are used for tasks like property prediction, where the model predicts specific properties of a molecule from its structure.
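The masked language modeling objective mentioned above can be sketched in a few lines. This is a simplified illustration applied to a SMILES string: every selected token is replaced by a `[MASK]` symbol, whereas BERT itself selects about 15% of tokens and sometimes substitutes a random token or leaves the token unchanged. The function name, the mask rate, and the seed are assumptions made for this example.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens and record what the model must recover."""
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)   # training target at this position
        else:
            masked.append(tok)
            labels.append(None)  # no loss is computed at this position
    return masked, labels

# Character-level tokens are enough for this particular SMILES string.
tokens = list("CC(=O)Oc1ccccc1C(=O)O")
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print("".join(masked))
```

During pretraining, the encoder sees the masked sequence and is scored only on the positions that have a label.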

Decoder-only models:

In June 2018, Radford et al. from OpenAI proposed the Generative Pre-trained Transformer (GPT). GPT is a decoder-only, left-to-right (unidirectional) language model that predicts the next word in a sequence from the previous words. Unlike the decoder in the original transformer of Vaswani et al., it has no encoder output to attend to. After pretraining on unlabeled text, GPT could carry its general language understanding over to specific tasks by fine-tuning on smaller annotated datasets. It uses positional embeddings to keep track of word order. Decoder-only models excel at generating sequences from an initial input and are employed in molecule generation, where the objective is to create new molecular structures with desired properties.
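What makes a decoder "left-to-right" is a causal mask: each position may attend only to itself and to earlier positions. Here is a minimal sketch, assuming three SMILES tokens and raw attention scores that are all zero so that the effect of the mask is easy to see.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, allowed):
    """Softmax over the allowed positions only; blocked positions get weight 0."""
    peak = max(s for s, ok in zip(scores, allowed) if ok)
    exps = [math.exp(s - peak) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

tokens = ["C", "C", "O"]
mask = causal_mask(len(tokens))

# All-zero raw scores (an assumption for illustration): any difference in the
# resulting weights comes from the mask alone.
raw_scores = [[0.0, 0.0, 0.0] for _ in tokens]
weights = [masked_softmax(row, allowed) for row, allowed in zip(raw_scores, mask)]

for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

The first token can only look at itself, the second at the first two, and so on. That is why such a model can be trained to predict each next token without seeing it in advance.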

Encoder-decoder models:

These models are designed for tasks that require both understanding input data and generating related outputs. They are commonly used for reaction prediction, translating reactants into products.
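Framed this way, reaction prediction looks like translation: the reactants are the source sentence and the products are the target. The sketch below prepares one such pair from a reaction SMILES string, which separates reactants, reagents, and products with `>` characters. The helper name is hypothetical, and the function assumes the string contains exactly two `>` separators.

```python
def split_reaction(reaction_smiles):
    """Split 'reactants>reagents>products' into a (source, target) pair."""
    reactants, reagents, products = reaction_smiles.split(">")
    # Keep reagents on the source side when they are present.
    source = reactants if not reagents else f"{reactants}>{reagents}"
    return source, products

# Acetic acid + ethanol -> ethyl acetate + water (no reagents listed).
esterification = "CC(=O)O.OCC>>CC(=O)OCC.O"
source, target = split_reaction(esterification)
reactant_molecules = source.split(".")  # '.' separates molecules in SMILES

print("encoder input :", source)
print("decoder target:", target)
```

An encoder-decoder model would read the source string and be trained to generate the target string token by token.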

Multi-task and multi-modal models:

Capable of handling various tasks and types of data simultaneously, these models are highly versatile tools in chemical research.

Applications of LLMs in Chemistry and Biochemistry

Molecular Property Prediction:

LLMs can predict essential properties of molecules, such as solubility, toxicity, and reactivity. These predictions are crucial for applications like drug discovery and materials science.

Models: Regression Transformer, CatBERTa, SELFormer, ChemBERTa, MolBERT, and SMILESBERT.

Inverse Design:

Inverse design involves generating new molecules with specific desired properties. LLMs can suggest novel molecular structures that meet predefined criteria, significantly speeding up the discovery of new compounds.

Models: BioT5+, Darwin, Text+Chem T5, and MolT5.

Synthesis Prediction:

LLMs can propose synthesis routes for specific molecules and help optimize chemical reactions, reducing the time and cost associated with experimental synthesis.

Models: ReactionT5, Galactica, ChemFormer, and T5Chem.

Multi-modal Models:

These models can process different types of data, such as textual descriptions and molecular structures, enhancing their ability to perform complex tasks in chemistry.

Models: CLAMP, 3MDiffusion, and TSMMG.

Autonomous Agents: The New Idea

The integration of LLMs into autonomous agents represents the next leap in the application of AI in chemistry. These agents combine the predictive power of LLMs with the ability to perform actions based on their predictions, making scientific research more efficient and automated.

Memory Module: This component allows the agent to store and recall past information, improving its ability to make informed decisions.

Planning and Reasoning Modules: These modules enable the agent to plan experiments and reason about outcomes, making it a valuable tool for researchers.

Profiling Module: This module assigns the agent a role or persona, such as a domain expert or a coder, which shapes how it approaches a task.

Perception Module: This module enhances the agent’s ability to interpret data from various sources, such as scientific literature and experimental results.

Tool Integration: Autonomous agents can integrate with various chemical tools and databases, streamlining the research process.
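As a rough illustration of how memory, planning, and tool integration fit together, here is a toy agent loop. Everything in it is hypothetical: the "planner" is a keyword rule standing in for an LLM, and the only tool is a three-entry lookup table standing in for a chemical database. It is a sketch of the control flow, not the design of any agent described in the review.

```python
from dataclasses import dataclass, field

# Hypothetical tool: a tiny lookup table standing in for a chemical database.
# Keys are SMILES strings, values are approximate molar masses in g/mol.
MOLAR_MASS = {"O": 18.015, "CCO": 46.069, "CC(=O)O": 60.052}

def lookup_molar_mass(smiles):
    return MOLAR_MASS.get(smiles)

@dataclass
class Agent:
    tools: dict                                  # tool integration
    memory: list = field(default_factory=list)   # memory module

    def plan(self, task):
        """Stand-in for the LLM planner: choose a tool with a keyword rule."""
        if "molar mass" in task:
            return "molar_mass", task.rsplit(" ", 1)[-1]
        return None, None

    def run(self, task):
        tool_name, argument = self.plan(task)
        if tool_name is None:
            result = "no suitable tool"
        else:
            result = self.tools[tool_name](argument)
        # Store what happened so later steps can recall it.
        self.memory.append((task, tool_name, result))
        return result

agent = Agent(tools={"molar_mass": lookup_molar_mass})
answer = agent.run("molar mass of CCO")
print(answer)
print(agent.memory)
```

In a real system the `plan` step would be an LLM call that reads the task and the memory, and the tool registry would hold synthesis planners, literature search, or database clients.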

Challenges and Future Directions

Despite the immense potential of LLMs and autonomous agents in chemistry, several challenges must be addressed before their benefits can be fully harnessed.

Data Quality and Availability: High-quality data is essential for training effective models. Ensuring the availability of comprehensive and accurate datasets is crucial.

Ethical Considerations: The use of AI in chemistry raises ethical questions, particularly in areas like drug design and chemical synthesis. Ensuring responsible use of these technologies is paramount.

Scalability: Developing models and agents that can scale to handle the vast chemical space remains a significant challenge.

Human-AI Collaboration: Integrating human expertise with AI capabilities can enhance the effectiveness of these technologies. A human-in-the-loop approach, where AI assists but does not replace human researchers, may be the most effective strategy.

Conclusion

The integration of large language models and autonomous agents into chemistry is poised to revolutionize the field. By leveraging the power of AI, researchers can accelerate scientific discovery, optimize chemical processes, and address global challenges more efficiently. As these technologies continue to evolve, they hold the promise of transforming the landscape of chemical research and innovation. The ongoing development of LLMs and autonomous agents represents a significant step towards a more automated and intelligent future in chemistry.

Article Source: Reference Paper | A repository has been built by the authors to keep track of all the latest advancements: https://github.com/ur-whitelab/LLMs-in-science

Important Note: arXiv releases preprints that have not yet undergone peer review. As a result, it is important to note that these papers should not be considered conclusive evidence, nor should they be used to direct clinical practice or influence health-related behavior. It is also important to understand that the information presented in these papers is not yet considered established or confirmed.

Neermita Bhattacharya is a consulting Scientific Content Writing Intern at CBIRT. She is pursuing B.Tech in computer science from IIT Jodhpur. She has a niche interest in the amalgamation of biological concepts and computer science and wishes to pursue higher studies in related fields. She has quite a bunch of hobbies- swimming, dancing ballet, playing the violin, guitar, ukulele, singing, drawing and painting, reading novels, playing indie videogames and writing short stories. She is excited to delve deeper into the fields of bioinformatics, genetics and computational biology and possibly help the world through research!