Artificial intelligence is transforming industries across the board, and the life sciences are no exception. A new study led by researchers at Anthropic, an AI safety startup based in San Francisco, and posted as a preprint on bioRxiv, examines how well large language models (LLMs) like ChatGPT can serve as AI assistants for bioinformatics research. The paper presents BioLLMBench, a framework for evaluating how well LLMs perform everyday bioinformatics tasks. The results reveal both promise and current limitations, underscoring the need for responsible development of AI in this complex domain.

As LLMs like ChatGPT, Anthropic’s Claude, and Google’s Bard gain popularity, there are high hopes for their utility in scientific fields. However, their actual capabilities in specialized domains like bioinformatics still need to be proven. This research tackles that knowledge gap through an extensive benchmarking effort. With life sciences poised to be disrupted by AI, empirical insight into the strengths and weaknesses of LLMs is invaluable for researchers anticipating their integration into real-world workflows.

Benchmarking Framework Evaluates Performance Across Bioinformatics Tasks

To thoroughly test LLMs, the researchers designed BioLLMBench to evaluate performance on 36 representative tasks across six key areas:

- Core domain knowledge

- Data visualization

- Coding

- Mathematical problem solving

- Summarizing research papers

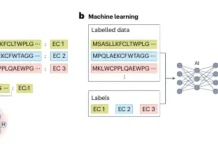

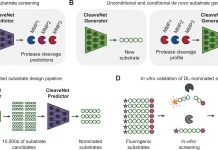

- Developing machine learning models

These tasks aim to mimic the daily challenges faced by bioinformaticians. The benchmark also includes questions ranging from basic concepts to expert-level complexity.

Using this framework, the study tested three leading LLMs – Anthropic’s Claude, Google’s Bard, and Meta’s LLaMA – by conducting over 2,000 experimental runs. Each model’s response was scored using metrics tailored to the task type. For example, domain knowledge answers were judged on accuracy, clarity, and relevance, while coding tasks were assessed on style, correctness, and error handling.
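To make the idea of task-specific scoring concrete, here is a minimal sketch of how such a rubric might be applied. The criteria names come from the description above; the 0 to 10 rating scale, the equal weighting, and the names `RUBRICS` and `score_response` are assumptions made for illustration and are not taken from the paper.

```python
from statistics import mean

# Criteria per task type, as named in the article.
# The 0-10 scale and equal weighting are assumptions, not the paper's method.
RUBRICS = {
    "domain_knowledge": ("accuracy", "clarity", "relevance"),
    "coding": ("style", "correctness", "error_handling"),
}


def score_response(task_type, ratings):
    """Average the per-criterion ratings required for the given task type."""
    criteria = RUBRICS[task_type]
    missing = [c for c in criteria if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    return mean([ratings[c] for c in criteria])


# Hypothetical ratings for one coding response, invented for illustration.
coding_score = score_response(
    "coding", {"style": 8, "correctness": 6, "error_handling": 4}
)
```

Keeping the criteria in one table per task type means every response of a given type is judged against the same checklist, which is what makes scores comparable across models.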

Key Results – LLMs Show Promise Alongside Limitations

The results reveal a mixed picture of LLMs’ readiness to assist bioinformatics research. On straightforward tasks like defining terms and differentiating concepts, all the models achieved high scores, indicating a strong grasp of core biology and genomics knowledge. However, performance varied widely across more complex tasks.

In coding challenges, Claude consistently produced executable scripts and helpful code comments, outperforming LLaMA and Bard. Claude also led in data visualization, providing complete Python code to generate plots, whereas LLaMA and Bard could only describe the steps in text. Bard edged out Claude at mathematical problem solving, while Claude generated more robust machine learning pipelines when given sufficient prompting and debugging.

However, major shortcomings emerged. All models struggled to summarize key insights from research papers, even though reading and understanding papers is an essential task for bioinformatics researchers. The best ROUGE scores were only around 40%, indicating that the summaries missed many key details and much of the papers' rhetorical structure. The models also required heavy guidance to produce complete machine learning code, including fixing errors and supplying missing steps.
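For readers unfamiliar with ROUGE, it measures how much of a reference summary's wording reappears in a generated summary. The article does not say which ROUGE variant the study used, so the sketch below assumes the simplest one, ROUGE-1 (unigram overlap), with plain whitespace tokenization. The function name `rouge_1` and the two example sentences are invented for illustration.

```python
from collections import Counter


def rouge_1(reference, candidate):
    """ROUGE-1 precision, recall, and F1 using lowercase whitespace tokens."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Clipped overlap: a word counts only as often as it appears in both texts.
    overlap = sum((ref_counts & cand_counts).values())
    recall = overlap / max(sum(ref_counts.values()), 1)
    precision = overlap / max(sum(cand_counts.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical reference and model summaries, invented for illustration.
reference = "the model predicts gene expression from sequence"
candidate = "the model predicts protein structure"
scores = rouge_1(reference, candidate)
print(scores)  # recall is 3/7, precision is 3/5, F1 is 0.5
```

Here three of the seven reference words are recovered, so a score in this range means more than half of the reference wording is absent from the generated summary. Production evaluations usually rely on an established ROUGE implementation with stemming and additional variants rather than a hand-rolled version like this.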

These results confirm that while LLMs have great potential to automate routine bioinformatics tasks, they cannot yet match human judgment for higher-order challenges like summarization and analysis. How useful they are as assistants depends strongly on the user’s domain expertise to interpret and validate outputs.

Contextual Analysis Reveals Insights on Optimizing LLM Usage

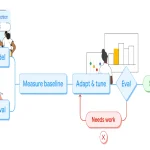

Beyond individual tasks, the study also tested how the chat context affected performance. The researchers compared scores when all questions were posed to a model within one continuous chat window versus when a new window was opened for each query.

While average scores were similar, new chat windows increased variability – sometimes producing better responses but also inferior ones compared to sticking with one window. Maintaining chat context appears to make model outputs more stable and predictable.

These findings suggest that bioinformatics newcomers may benefit from staying in a single window, while experts, who are able to validate unusual outputs, can try new windows to draw more varied responses from the models.
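The distinction between similar averages and different variability can be illustrated with a short sketch. The scores below are invented purely for illustration and are not data from the study; the function name `summarize` and the 0 to 10 scale are likewise assumptions.

```python
from statistics import mean, stdev

# Hypothetical scores on an assumed 0-10 scale, invented for illustration only.
same_window = [7.0, 7.5, 7.0, 7.5, 7.0]   # all questions asked in one chat
new_windows = [5.0, 9.0, 6.0, 9.5, 6.5]   # a fresh chat opened per question


def summarize(scores):
    """Return the mean and sample standard deviation of a list of scores."""
    return {"mean": mean(scores), "stdev": stdev(scores)}


same_stats = summarize(same_window)
new_stats = summarize(new_windows)
print(same_stats)
print(new_stats)
```

In this toy data both conditions average the same score, but the fresh-window scores are spread much more widely. That is the pattern the study describes: comparing means alone would hide the difference, so the spread needs to be reported alongside the average.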

Current Limitations and Ethical Considerations

Despite their promise, responsible LLM integration in bioinformatics requires acknowledging limitations around biases, privacy, interpretability, and potential misinformation. The researchers emphasize that LLMs should not be viewed as omniscient experts. Users must critically evaluate outputs, especially for higher-order tasks.

They also caution that LLMs do not yet adequately understand complex bioinformatics workflows, so erroneous or oversimplified outputs could mislead non-experts. Users should be transparent about an LLM’s involvement in any work.

Looking Ahead – Guiding Responsible LLM Adoption in Bioinformatics

By empirically demonstrating what LLMs can and cannot currently achieve in bioinformatics, this study provides crucial insights for managing expectations and directing further development. While not yet a silver bullet, LLMs have clear potential to accelerate and enhance bioinformatics research when thoughtfully implemented.

The way forward requires addressing current limitations, expanding rigorous benchmarking to additional models and tasks, and establishing best practices for transparent and ethical LLM integration into real-world applications. With proper guidance and oversight, AI promises to unlock new discoveries that improve human health and scientific understanding.

Story Source: Reference Paper

Important Note: bioRxiv releases preprints that have not yet undergone peer review. These papers should therefore not be considered conclusive evidence, nor should they be used to direct clinical practice or influence health-related behavior. The information they present is not yet considered established or confirmed.

Dr. Tamanna Anwar is a Scientist and Co-founder of the Centre of Bioinformatics Research and Technology (CBIRT). She is a passionate bioinformatics scientist and a visionary entrepreneur. Dr. Tamanna has worked as a Young Scientist at Jawaharlal Nehru University, New Delhi. She has also worked as a Postdoctoral Fellow at the University of Saskatchewan, Canada. She has several scientific research publications in high-impact research journals. Her latest endeavor is the development of a platform that acts as a one-stop solution for all bioinformatics related information as well as developing a bioinformatics news portal to report cutting-edge bioinformatics breakthroughs.