Building a smart and helpful AI medical assistant is challenging. Healthcare faces several obstacles, including rising costs, a shortage of skilled staff, and difficulty reaching remote locations. Patients increasingly turn to digital medical assistants for guidance and help, often treating them as human-like computer helpers. A fundamental limitation of existing assistants is that they draw on too few data modalities, which prevents a comprehensive view of the patient. This study presents GigaPevt, which its authors describe as the first multimodal medical assistant to combine specialized medical models with the dialog capabilities of large language models. GigaPevt shows an immediate improvement in dialog quality and metric performance, including a 1.18% increase in question-answering accuracy.

Introduction

Access to high-quality healthcare remains a major socioeconomic concern in many countries, owing to high costs, a shortage of medical experts, and accessibility challenges in rural areas. One possible technological solution is to develop intelligent medical assistants. Several projects have built assistants of this type in the form of symptom checkers. A primary disadvantage of these systems is that they are restricted to text-based communication: patients report their symptoms and receive answers via chat. As a result, the algorithm misses a significant share of the nonverbal context of the doctor-patient dialog. Another issue with these AI assistants is the way their survey systems are constructed.

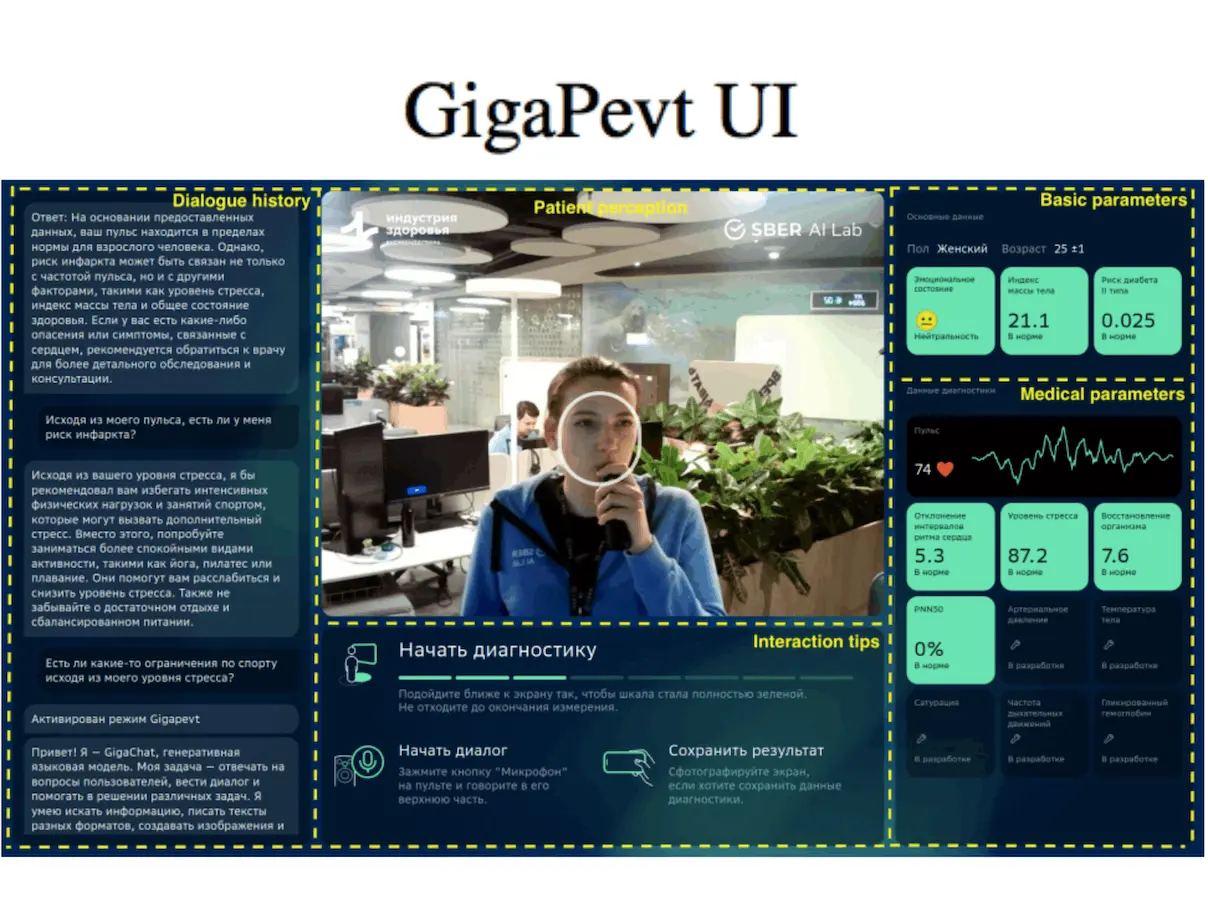

The rapid progress of large language models (LLMs) opens new opportunities for building the healthcare assistants of the future. A group of researchers has introduced GigaPevt, a multimodal medical assistant. By combining specialized medical models with the extensive dialog capabilities of LLMs, GigaPevt draws on context from text, voice, and visual modes. This design addresses the problems described above and substantially improves patient interaction and conversation quality.

The Power of Multimodal Data

GigaPevt sets itself apart by combining the strengths of two kinds of AI models:

- Large language models (LLMs): LLMs are conversational experts that support natural, informative discussions.

- Specialized medical models: Models built on deep medical expertise and state-of-the-art techniques supply evidence-based diagnostic data.

By combining LLMs with specialized medical models, GigaPevt gains several significant advantages over traditional approaches:

- Improved Dialog Quality:

GigaPevt uses LLMs to give patients a calming, engaging conversation along with instructive information.

- Better Task Performance:

By drawing on specialized models from the medical domain, GigaPevt answers questions more accurately, with a 1.18% improvement in question-answering accuracy over traditional models that use text data only.

- Mitigating Data Scarcity:

Unlike text-only approaches, GigaPevt is less constrained by the lack of data in any single format, because its multimodal strategy draws on several data modalities (text, voice, and visuals). This gives the model more information and lets it better understand the user's state, even in situations where conventional text-based methods would struggle.

A Closer Look at GigaPevt’s Architecture

GigaPevt uses a client-server architecture. The client is a rich client written in Python that runs lightweight, low-latency models to keep the user experience comfortable and consistent: a face detector plus Text-to-Speech (TTS) and Automatic Speech Recognition (ASR) API services. Client logic handles data flow, client state management, and network interactions with the rest of the system.

On the server side, the developers used Flask as the back-end framework. The server, which handles the patient-assistant interface, contains the GP Dialog Logic component, the specialized models, and a model manager.
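The article names a model manager on the server but does not describe its interface. The following is a minimal sketch, assuming each specialized model can be called as a function that takes a request dict and returns a dict of findings; `ModelManager`, `register`, `analyze`, and `build_context` are hypothetical names, plain Python stands in for the Flask layer, and stub lambdas stand in for real models. It shows one plausible way to fan a request out to several models and flatten their outputs into text that dialog logic could pass to an LLM.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

# Hypothetical model signature: request dict in, dict of findings out.
ModelFn = Callable[[Dict[str, Any]], Dict[str, Any]]


@dataclass
class ModelManager:
    """Minimal registry that fans one request out to specialized models."""

    models: Dict[str, ModelFn] = field(default_factory=dict)

    def register(self, name: str, fn: ModelFn) -> None:
        self.models[name] = fn

    def analyze(self, request: Dict[str, Any]) -> Dict[str, Any]:
        findings: Dict[str, Any] = {}
        for name, fn in self.models.items():
            try:
                findings[name] = fn(request)
            except Exception as exc:
                # One failing model should not block the whole dialog turn.
                findings[name] = {"error": str(exc)}
        return findings


def build_context(findings: Dict[str, Any]) -> str:
    """Flatten model outputs into one line of text per model, sorted by name."""
    lines = []
    for name in sorted(findings):
        items = ", ".join(f"{k}={v}" for k, v in sorted(findings[name].items()))
        lines.append(f"[{name}] {items}")
    return "\n".join(lines)


# Usage with stub models standing in for real emotion and rPPG models.
manager = ModelManager()
manager.register("emotion", lambda req: {"label": "neutral"})
manager.register("rppg", lambda req: {"heart_rate_bpm": 72})
context = build_context(manager.analyze({"frame_id": 1}))
```

Catching exceptions per model is a design choice for this sketch: a crashed BMI or emotion model degrades the context rather than failing the patient's turn.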

Multimodal Analysis with Specialized Models

GigaPevt uses a wide range of specialized models to evaluate different kinds of data, giving a more comprehensive picture of the user’s state:

Video-based Facial Analytics:

- User identification: Pre-trained face recognition models identify users with 98.19% accuracy. This enables personalized interaction and removes the need for repeated user verification.

- Sociodemographic prediction: Models trained on existing datasets (for example, VGGFace2) predict characteristics such as age, gender, and ethnicity. The assistant can use this information to tailor its communication style to the user's demographics.

- rPPG model: The remote photoplethysmography (rPPG) model extracts health indicators such as heart rate from face videos using the Plane Orthogonal-to-Skin (POS) method. This allows vital signs to be monitored continuously without additional wearable equipment.

- Facial expression recognition: An EfficientNet-B0 model trained on the AffectNet dataset recognizes emotions with 61.93% accuracy. Knowing the user's emotional state lets the assistant respond more appropriately and sympathetically.

- Body Mass Index (BMI) estimation: A ResNet34 model trained on Reddit data and the FIW-BMI dataset predicts BMI from face images with a mean squared error (MSE) of 4. This non-invasive method provides a first approximation of the user's weight status.
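The rPPG item above names the POS method without showing how it works. Below is a minimal pure-Python sketch of POS as originally proposed by Wang et al.: it assumes per-frame mean RGB values of facial skin have already been extracted (face detection and skin masking are not shown), uses the commonly cited 1.6 s sliding window, and estimates heart rate as the strongest frequency between 0.7 and 4 Hz. The function names and the synthetic test signal are hypothetical; this is not GigaPevt's code.

```python
import math
import statistics

# POS projection matrix: both rows are orthogonal to the unit skin-tone vector.
P = ((0.0, 1.0, -1.0), (-2.0, 1.0, 1.0))


def pos_pulse(rgb, fps, window_sec=1.6):
    """Recover a pulse signal from per-frame mean skin RGB tuples using POS."""
    n_frames = len(rgb)
    win = math.ceil(window_sec * fps)
    pulse = [0.0] * n_frames
    for start in range(n_frames - win + 1):
        window = rgb[start:start + win]
        # Temporal normalization: divide each channel by its mean in the window.
        means = [statistics.fmean(px[c] for px in window) for c in range(3)]
        norm = [[px[c] / means[c] for c in range(3)] for px in window]
        # Project normalized colors onto the plane orthogonal to the skin tone.
        s1 = [sum(P[0][c] * v[c] for c in range(3)) for v in norm]
        s2 = [sum(P[1][c] * v[c] for c in range(3)) for v in norm]
        # Alpha-tuning: weight the second projection by the ratio of std devs.
        std2 = statistics.pstdev(s2)
        alpha = statistics.pstdev(s1) / std2 if std2 > 0 else 0.0
        h = [a + alpha * b for a, b in zip(s1, s2)]
        h_mean = statistics.fmean(h)
        # Overlap-add the zero-mean window signal into the full-length output.
        for i, value in enumerate(h):
            pulse[start + i] += value - h_mean
    return pulse


def heart_rate_bpm(pulse, fps, lo_hz=0.7, hi_hz=4.0, step_hz=0.01):
    """Return the frequency (in beats per minute) with the most spectral power."""
    mean = statistics.fmean(pulse)
    x = [v - mean for v in pulse]
    best_f, best_power = lo_hz, -1.0
    for k in range(int(round((hi_hz - lo_hz) / step_hz)) + 1):
        f = lo_hz + k * step_hz
        # Discrete-time Fourier transform evaluated at a single frequency f.
        re = sum(v * math.cos(2 * math.pi * f * n / fps) for n, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * f * n / fps) for n, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return best_f * 60.0


# Synthetic 10 s clip at 30 fps with a 1.2 Hz (72 bpm) pulse; the per-channel
# pulse strengths and base colors are illustrative values only.
fps = 30.0
strength = (0.33, 0.77, 0.53)
base = (100.0, 120.0, 90.0)
frames = []
for n in range(300):
    s = math.sin(2 * math.pi * 1.2 * n / fps)
    frames.append(tuple(base[c] * (1 + 0.01 * strength[c] * s) for c in range(3)))
bpm = heart_rate_bpm(pos_pulse(frames, fps), fps)
```

Real face video adds motion and lighting noise, which is exactly what the projection and alpha-tuning steps are meant to suppress; a production system would also band-pass filter the signal and use an FFT rather than this direct frequency scan.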

GP Dialog Logic:

- Retrieval-Augmented Generation (RAG): RAG improves answer quality by retrieving relevant context and supplying it to the model together with the user's query, which improves the model's understanding of the question.

- Chain of Thought (CoT): By having the model explicitly articulate the steps it takes to reach a response, CoT improves performance and answer quality on health-related tasks and makes interactions more transparent and dependable.
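The two dialog techniques above can be combined when building the prompt for the LLM. This is a minimal sketch, not GigaPevt's pipeline: the article does not say which retriever it uses, so bag-of-words cosine similarity stands in for the embedding-based retrieval that RAG systems typically use, and the step-by-step instruction is a generic CoT wording. `retrieve`, `build_prompt`, and the example passages are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase alphabetic tokens; digits and punctuation are dropped."""
    return re.findall(r"[a-z]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """RAG retrieval step: return the k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query, documents, patient_context="", k=2):
    """Assemble retrieved passages, patient context, and a CoT instruction."""
    passages = "\n".join(f"- {p}" for p in retrieve(query, documents, k))
    return (
        f"Patient context:\n{patient_context}\n\n"
        f"Reference passages:\n{passages}\n\n"
        f"Question: {query}\n"
        "Think step by step: list the relevant facts, reason through them, "
        "then state the final answer."
    )


docs = [
    "Fever above 38 C may indicate infection.",
    "Normal resting heart rate is 60 to 100 beats per minute.",
    "BMI is weight divided by height squared.",
]
query = "What is a normal resting heart rate?"
prompt = build_prompt(query, docs, patient_context="heart_rate_bpm=72", k=1)
```

The `patient_context` argument is where outputs of the specialized models (heart rate, emotion, and so on) could be injected, which is how a multimodal assistant can ground the LLM's answer in the patient's current state.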

Evaluation and Future Directions

When GigaPevt was evaluated on the RuMedBench benchmark, the researchers found that it significantly outperformed the baseline models in answering questions, including in human assessment. This illustrates how effectively the multimodal approach provides patients with accurate and insightful answers to their inquiries.

Looking ahead, the authors aim to:

- Advanced LLM knowledge management: Strengthen the model's focus on, and understanding of, the medical field using techniques such as Advanced RAG and Modular RAG. This should let the model answer complex medical questions more skillfully and accurately.

- Electronic health record integration: Connect the assistant to patients' electronic health records (EHRs) to improve context awareness and answer specific questions about a patient's medical history. Through this connection, the assistant could tailor its responses and suggestions to the user's individual medical profile.

Video Source: https://youtu.be/yETMJqIThPE?si=R6pnILKnMaR-Edv0

Conclusion

GigaPevt's multimodal approach holds immense promise for building intelligent medical assistants that support natural interactions and develop a more comprehensive understanding of patients. To better capture the medical domain, the researchers plan to improve their LLM with modern knowledge management techniques such as Advanced and Modular RAG. They also plan a deeper integration with patients' electronic health records to improve context awareness and tailor questions to each patient.

As research progresses, this technology may revolutionize healthcare by improving patient-centered, high-quality, and accessible care.

Article source: Reference Paper

Important Note: arXiv releases preprints that have not yet undergone peer review. As a result, it is important to note that these papers should not be considered conclusive evidence, nor should they be used to direct clinical practice or influence health-related behavior. It is also important to understand that the information presented in these papers is not yet considered established or confirmed.


Anchal is a consulting scientific writing intern at CBIRT with a passion for bioinformatics and its miracles. She is pursuing an MTech in Bioinformatics at Delhi Technological University, Delhi. Through engaging prose, she invites readers to explore the captivating world of bioinformatics, showcasing its groundbreaking contributions to understanding the mysteries of life. Besides science, she enjoys reading and painting.