In a recent study, scientists from Tsinghua University, China, introduced GeneFormer, a learning-based system for compressing gene sequences. The technique uses transformers to compress DNA sequences more efficiently than previous methods, producing smaller files and faster processing, which makes the ever-growing volume of genetic data easier to handle.

Introduction

The human body is an incredible device for storing information. Nearly every cell contains a full set of instructions in the form of DNA, encoded as a sequence of billions of nucleotides. These chemical building blocks, abbreviated as A, C, G, and T, form the language in which life expresses itself and builds proteins and other necessities.

Thanks to improvements in DNA sequencing technology, we can now read this genetic code faster and more precisely than ever before. However, this progress comes at a price: it demands large amounts of data storage. A single human genome can take up gigabytes, and as researchers sequence more genomes to fight diseases, the volume of data grows quickly. This is where data compression comes in handy. Like compressing a folder full of documents on your computer, these techniques reduce the storage space required for genetic information. But compressing genetic information is a balancing act: we need methods that are effective (they reduce size) without compromising precision (they preserve the original genetic code exactly).
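To make the idea of lossless size reduction concrete, here is a minimal sketch of a naive baseline: since there are only four nucleotides, each one fits in 2 bits instead of an 8-bit text character. This is not GeneFormer's method, and the helper names `pack` and `unpack` are made up for this illustration.

```python
# Naive 2-bit packing: a simple lossless baseline, not GeneFormer's method.
CODES = {"A": 0, "C": 1, "G": 2, "T": 3}
BASES = "ACGT"

def pack(seq: str) -> bytes:
    """Pack a string of A/C/G/T into bytes, four nucleotides per byte."""
    out = bytearray()
    for i in range(0, len(seq), 4):
        chunk = seq[i:i + 4]
        byte = 0
        for base in chunk:
            byte = (byte << 2) | CODES[base]
        # Left-align a short final chunk so unpacking always reads from the top bits.
        byte <<= 2 * (4 - len(chunk))
        out.append(byte)
    return bytes(out)

def unpack(data: bytes, length: int) -> str:
    """Recover exactly `length` nucleotides from the packed bytes."""
    bases = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            bases.append(BASES[(byte >> shift) & 0b11])
    return "".join(bases[:length])

seq = "GATTACAGATTACA"
packed = pack(seq)
restored = unpack(packed, len(seq))
print(len(seq), "characters ->", len(packed), "bytes")
print("lossless:", restored == seq)
```

Fixed 2-bit packing treats every nucleotide as equally likely. Learned compressors aim to do better by predicting which nucleotide is likely to come next.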

GeneFormer: Transformers for Gene Compression

What is a Transformer?

Transformers are a type of neural network that has transformed natural language processing (NLP). They are remarkably good at capturing relationships between words in a sentence, which makes them suitable for applications such as machine translation and text summarization.

Accordingly, the researchers behind GeneFormer adapted the transformer architecture specifically for the gene compression challenge.

Here is how it works:

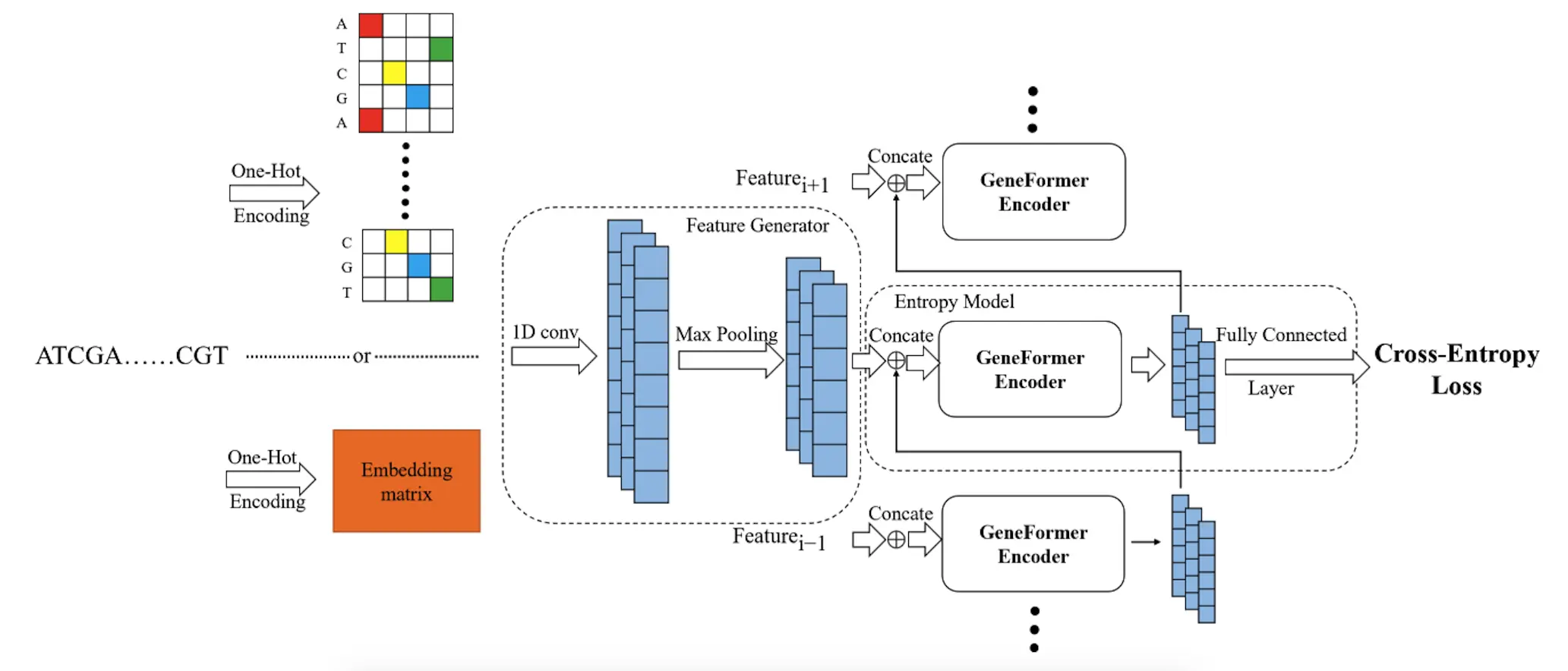

Feature Extraction: The first step extracts informative patterns from the DNA sequence. In GeneFormer, this is done by convolutional neural network layers, much as image recognition algorithms identify edges and shapes in pictures.
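As a rough sketch of what a convolutional layer does on DNA, the example below slides a single filter over a one-hot encoded sequence. The filter here is hand-written to respond to the motif "TATA" purely for illustration; in a real network the filters are learned during training, and GeneFormer's actual layer configuration is not reproduced here.

```python
BASES = "ACGT"

def one_hot(seq):
    """Encode each nucleotide as a 4-element vector, one channel per base."""
    return [[1.0 if b == base else 0.0 for base in BASES] for b in seq]

def conv1d(x, kernel):
    """Slide `kernel` over `x` (no padding) and return one score per window."""
    k = len(kernel)
    out = []
    for start in range(len(x) - k + 1):
        score = 0.0
        for offset in range(k):
            for ch in range(4):
                score += x[start + offset][ch] * kernel[offset][ch]
        out.append(score)
    return out

# Hypothetical hand-made filter: the one-hot encoding of the motif itself.
motif_filter = one_hot("TATA")
scores = conv1d(one_hot("GGTATACC"), motif_filter)
print(scores)  # the highest score marks the window that matches the motif
```

Each score counts how many positions in the window agree with the filter, so the peak lands where the pattern occurs.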

Context Modeling with Transformers: The transformer-based context model is the heart of GeneFormer. In contrast to traditional compression methods that focus on each nucleotide separately, transformers can analyze the entire sequence and learn how its nucleotides relate to one another. As a result, GeneFormer can exploit the inherent redundancies and patterns in DNA strands, leading to more effective compression.
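The core operation that lets a transformer relate positions to one another is scaled dot-product self-attention. The sketch below is a heavily simplified, single-head version that assumes one-hot embeddings and no learned projections (queries, keys, and values are all the raw input). A real transformer adds learned weights, multiple heads, and positional information, and this is not GeneFormer's architecture.

```python
import math

EMBED = {
    "A": [1.0, 0.0, 0.0, 0.0],
    "C": [0.0, 1.0, 0.0, 0.0],
    "G": [0.0, 0.0, 1.0, 0.0],
    "T": [0.0, 0.0, 0.0, 1.0],
}

def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Single-head attention with Q = K = V = x (a simplifying assumption)."""
    d = len(x[0])
    weights, outputs = [], []
    for q in x:
        # Similarity of this position to every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Output is a weighted mix of all positions' vectors.
        outputs.append([sum(w[j] * x[j][c] for j in range(len(x))) for c in range(d)])
    return outputs, weights

x = [EMBED[b] for b in "ACGA"]
outputs, weights = self_attention(x)
for row in weights:
    print([round(w, 3) for w in row])
```

In this toy setup, the first "A" puts more weight on the other "A" than on "C" or "G", which shows how every position can draw on information from anywhere else in the sequence.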

Multi-Level Grouping for Speed: Transformers tend to consume substantial computational resources, so they can be effective without being efficient. To address this, the researchers introduced multi-level grouping. Simply put, it breaks a long sequence into smaller parts that are processed in parallel, speeding up both compression and decompression.
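The general idea of splitting a sequence and handling the pieces in parallel can be sketched as follows. This is only a single-level illustration with several assumptions: the group size of 256 is arbitrary, and Python's standard `zlib` stands in for the neural compressor. The paper's actual multi-level scheme is not reproduced here.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

def split_into_groups(seq, group_size):
    """Cut the sequence into consecutive fixed-size groups (the last may be shorter)."""
    return [seq[i:i + group_size] for i in range(0, len(seq), group_size)]

def compress_group(group):
    # zlib is a stand-in here; GeneFormer uses a learned model instead.
    return zlib.compress(group.encode("ascii"))

def decompress_group(blob):
    return zlib.decompress(blob).decode("ascii")

seq = "ACGT" * 250 + "GATTACA" * 100
groups = split_into_groups(seq, group_size=256)

with ThreadPoolExecutor() as pool:
    # map() returns results in input order, so the groups reassemble correctly.
    blobs = list(pool.map(compress_group, groups))
    restored = "".join(pool.map(decompress_group, blobs))

print(len(groups), "groups; lossless:", restored == seq)
```

Because each group is handled independently, the work can be spread across workers. The trade-off is that a group cannot use context from outside itself.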

Lossless Compression: The final step is dynamic arithmetic coding, which encodes each nucleotide using the probability the model assigns to it. Because the decoder can reproduce the same probabilities, the decompressed DNA string is an exact copy of the original (lossless).
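A toy version of adaptive arithmetic coding shows why this step is lossless. The sketch below makes two assumptions: a simple running count of nucleotides stands in for the neural network's predictions, and exact fractions replace the finite-precision bit output that a practical coder would use. The function names are made up for this example.

```python
from fractions import Fraction
import math

BASES = "ACGT"

def interval_for(index, counts):
    """Sub-interval of [0, 1) that the current model assigns to one symbol."""
    total = sum(counts)
    return Fraction(sum(counts[:index]), total), Fraction(counts[index], total)

def encode(seq):
    counts = [1, 1, 1, 1]          # adaptive model: starts uniform
    low, width = Fraction(0), Fraction(1)
    for base in seq:
        i = BASES.index(base)
        s_low, s_width = interval_for(i, counts)
        low += width * s_low       # narrow the interval to this symbol's slice
        width *= s_width
        counts[i] += 1             # update the model after coding the symbol
    return low + width / 2, width  # any number inside the final interval works

def decode(value, length):
    counts = [1, 1, 1, 1]          # decoder rebuilds the same model step by step
    low, width = Fraction(0), Fraction(1)
    out = []
    for _ in range(length):
        target = (value - low) / width
        for i in range(4):
            s_low, s_width = interval_for(i, counts)
            if s_low <= target < s_low + s_width:
                break
        out.append(BASES[i])
        low += width * s_low
        width *= s_width
        counts[i] += 1
    return "".join(out)

seq = "AAAAAAAACAAAAAAG"
value, width = encode(seq)
ideal_bits = -math.log2(width)
print("lossless:", decode(value, len(seq)) == seq)
print("ideal bits:", round(ideal_bits, 2), "vs", 2 * len(seq), "bits at 2 bits per base")
```

The better the model predicts each nucleotide, the wider the final interval and the fewer bits are needed to pinpoint it. This is why a stronger context model translates directly into a better compression ratio.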

GeneFormer’s Advantages

The researchers compared the performance of GeneFormer with different gene-compression techniques on various datasets.

Here’s what they found:

- Higher Compression Ratio: GeneFormer stored more genetic information in the same amount of space than earlier methods.

- Speedier Decoding: Unlike earlier learning-based compression methods, which are time-consuming, GeneFormer's multi-level grouping approach made decompression more efficient.

- Generalization Capability: The model performed well even when trained on data from different organisms.

The Future of Gene Compression

GeneFormer is a major stride in gene compression and offers a promising way of dealing with growing amounts of genetic data. It combines the modeling power of transformers with a novel grouping scheme to improve on existing compression techniques.

Nonetheless, the researchers acknowledged that there is still room for improvement. Future research could concentrate on more efficient dependency modeling approaches that would further speed up compression and decompression. Moreover, experimenting with model architectures and training methods specifically tailored to DNA compression may unlock additional benefits.

Join the Conversation

Developing efficient gene compression tools is crucial to advancing our understanding of genetics and unlocking the potential of personalized medicine. With its transformer-based method, GeneFormer opens up new ways to store, analyze, and use huge repositories of genetic data.

This area keeps evolving, and scientists around the world are exploring new approaches to gene compression.

What do you think about GeneFormer? Add your comments below and let’s chat!

Article Source: Reference Paper | GeneFormer code will be released on GitHub once the paper is accepted.

Important Note: arXiv releases preprints that have not yet undergone peer review. These papers should therefore not be considered conclusive evidence, used to direct clinical practice, or used to influence health-related behavior, and the information they present is not yet established or confirmed.

Anchal is a consulting scientific writing intern at CBIRT with a passion for bioinformatics and its miracles. She is pursuing an MTech in Bioinformatics from Delhi Technological University, Delhi. Through engaging prose, she invites readers to explore the captivating world of bioinformatics, showcasing its groundbreaking contributions to understanding the mysteries of life. Besides science, she enjoys reading and painting.