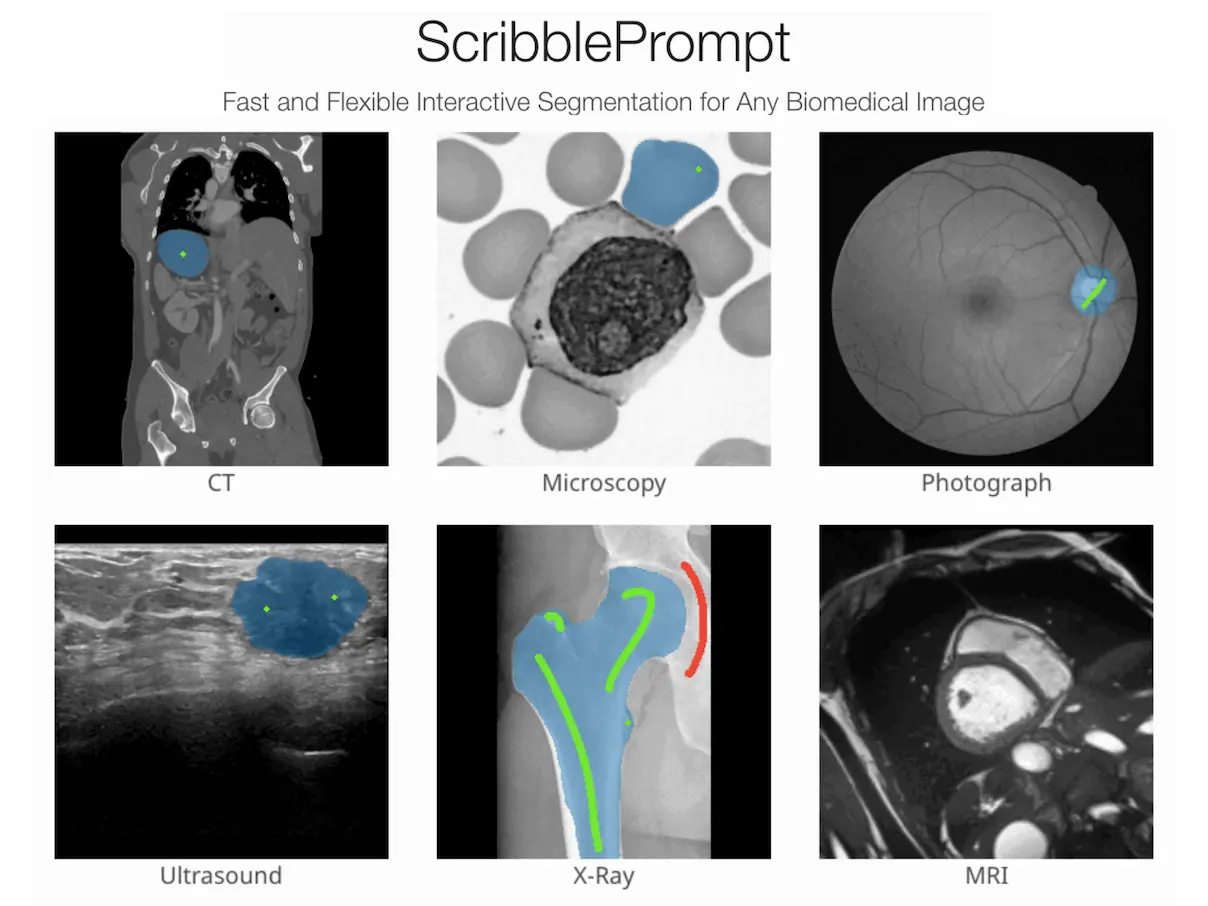

Clinical care and scientific research both depend heavily on biomedical image segmentation. Deep learning models can accurately automate specific biomedical image segmentation tasks when they are trained on enough labeled data, but manually segmenting images to create that training data takes considerable effort and domain expertise. Researchers introduce ScribblePrompt, an interactive segmentation tool for biomedical imaging built on a neural network that lets human annotators segment previously unseen structures using bounding boxes, clicks, and scribbles. On datasets not seen during training, ScribblePrompt produces more accurate segmentations than earlier approaches. Compared with the next best method, it improves Dice by 15% and reduces annotation time by 28%. ScribblePrompt's success can be attributed to its methods for simulating user interactions and labels, a network that enables fast inference, and a training strategy that draws on a highly diverse set of images and tasks.

Introduction

Biomedical image segmentation is a crucial step in many clinical care and biomedical research workflows. Deep learning is now the go-to technique for automating established segmentation tasks. However, researchers and practitioners frequently encounter new segmentation problems that involve new imaging modalities or new regions of interest. Unfortunately, training accurate models for new domains requires many images and careful annotations from experts.

The most widely used interactive segmentation systems for biomedical imaging offer only minimal, intensity-based algorithmic support. Although the literature on learning-based systems is growing, their practical use remains limited. One likely reason is that these systems are usually tailored to specific tasks or modalities, which restricts their scope. Recent vision foundation models aim for broad applicability but must be adapted to the medical domain. In addition, although many interactive segmentation models are straightforward to build, they are hard to use in practice because they require specific interactions, such as precisely placed clicks. Because of these drawbacks, current learning-based models are rarely used in real-world settings.

Understanding ScribblePrompt

ScribblePrompt is a new approach to interactive segmentation in biomedical image analysis, designed to be practical for clinicians and researchers. Through rigorous quantitative experiments, the researchers show that, given the same amount of interaction, ScribblePrompt produces more accurate segmentations than earlier approaches on datasets not seen during training. In a user study with subject-matter experts, ScribblePrompt improved Dice by 15% over the next best approach and cut annotation time by 28%. Its success rests on several deliberate design choices: algorithms for simulating user interactions and labels, a network that supports fast inference, and a training strategy that combines a highly diverse set of images and tasks.
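Dice, the metric behind the 15% figure, measures the overlap between a predicted mask A and a reference mask B as 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (perfect agreement). Below is a minimal sketch in plain Python, assuming masks are nested lists of 0/1 values; the function name `dice` and the convention for two empty masks are illustrative choices, not taken from the paper.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # binary mask as rows of 0/1 values


def dice(pred: Mask, target: Mask) -> float:
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) between two binary masks."""
    if len(pred) != len(target) or any(len(p) != len(t) for p, t in zip(pred, target)):
        raise ValueError("masks must have the same shape")
    intersection = size_pred = size_target = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            p, t = bool(p), bool(t)
            intersection += p and t  # pixel is foreground in both masks
            size_pred += p
            size_target += t
    if size_pred + size_target == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * intersection / (size_pred + size_target)


# Usage: 2 shared pixels, 3 foreground pixels in each mask -> 4 / 6
pred = [[1, 1, 0], [0, 1, 0]]
target = [[1, 0, 0], [0, 1, 1]]
print(round(dice(pred, target), 4))  # 0.6667
```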

Key Features of ScribblePrompt

- Outperforms current state-of-the-art methods in both speed and accuracy on biomedical image segmentation tasks, especially for labels and image types not seen during training.

- Supports several annotation formats, including clicks, scribbles, and bounding boxes.

- Runs fast enough for interactive use, even on a single CPU.
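To accept clicks, scribbles, and bounding boxes through one network, a common design is to rasterize each prompt type into its own image-sized channel and stack those channels with the image. The sketch below assumes that design with a hypothetical six-channel layout (image, positive and negative clicks, positive and negative scribbles, box); `encode_prompts` and its arguments are illustrative names, and this is not ScribblePrompt's actual input format.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[int, int]          # (row, col)
Box = Tuple[int, int, int, int]  # (row_min, col_min, row_max, col_max), inclusive
Channel = List[List[float]]


def _blank(h: int, w: int) -> Channel:
    return [[0.0] * w for _ in range(h)]


def _rasterize_points(points: Sequence[Point], h: int, w: int) -> Channel:
    """Set each in-bounds (row, col) to 1.0; out-of-bounds points are ignored."""
    channel = _blank(h, w)
    for r, c in points:
        if 0 <= r < h and 0 <= c < w:
            channel[r][c] = 1.0
    return channel


def encode_prompts(
    image: Sequence[Sequence[float]],
    pos_clicks: Sequence[Point] = (),
    neg_clicks: Sequence[Point] = (),
    pos_scribble: Sequence[Point] = (),
    neg_scribble: Sequence[Point] = (),
    box: Optional[Box] = None,
) -> List[Channel]:
    """Hypothetical encoder: stack the image and one binary map per prompt type."""
    h, w = len(image), len(image[0])
    box_channel = _blank(h, w)
    if box is not None:
        r0, c0, r1, c1 = box
        # Fill the box interior, clipped to the image bounds, with 1.0.
        for r in range(max(r0, 0), min(r1, h - 1) + 1):
            for c in range(max(c0, 0), min(c1, w - 1) + 1):
                box_channel[r][c] = 1.0
    return [
        [[float(v) for v in row] for row in image],
        _rasterize_points(pos_clicks, h, w),
        _rasterize_points(neg_clicks, h, w),
        _rasterize_points(pos_scribble, h, w),
        _rasterize_points(neg_scribble, h, w),
        box_channel,
    ]


# Usage: a 4x4 image with one positive click and a 2x3 box
channels = encode_prompts([[0.0] * 4 for _ in range(4)], pos_clicks=[(1, 1)], box=(0, 0, 1, 2))
print(len(channels), sum(map(sum, channels[5])))  # 6 6.0
```

Because every prompt type becomes just another input channel, one network can take any combination of prompts, and unused prompt types simply stay all-zero.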

Training of ScribblePrompt

ScribblePrompt was trained with simulated scribbles and clicks on 54,000 images from 65 datasets, covering scans of the eyes, thorax, spine, cells, skin, abdominal muscles, neck, brain, bones, teeth, and lesions. The training data spanned sixteen types of medical images, including microscopy, CT, X-ray, MRI, ultrasound, and photographs.
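Training on simulated interactions means deriving prompts automatically from each ground-truth mask. The exact simulation procedure is not described here, so the following is only a minimal sketch under simple assumptions: a positive click is sampled uniformly from the foreground, and a bounding box is the mask's tight box loosened by a random margin. The function names and the `max_margin` parameter are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

Mask = Sequence[Sequence[int]]


def foreground(mask: Mask) -> List[Tuple[int, int]]:
    """All (row, col) positions where the mask is nonzero."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]


def simulate_click(mask: Mask, rng: random.Random) -> Optional[Tuple[int, int]]:
    """Positive click drawn uniformly from foreground pixels (None if mask is empty)."""
    pixels = foreground(mask)
    return rng.choice(pixels) if pixels else None


def simulate_box(
    mask: Mask, rng: random.Random, max_margin: int = 0
) -> Optional[Tuple[int, int, int, int]]:
    """Tight bounding box of the mask, expanded by 0..max_margin pixels per side."""
    pixels = foreground(mask)
    if not pixels:
        return None
    h, w = len(mask), len(mask[0])
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    # Expanding (never shrinking) keeps row_min <= row_max and col_min <= col_max.
    return (
        max(min(rows) - rng.randint(0, max_margin), 0),
        max(min(cols) - rng.randint(0, max_margin), 0),
        min(max(rows) + rng.randint(0, max_margin), h - 1),
        min(max(cols) + rng.randint(0, max_margin), w - 1),
    )


# Usage: a 2x2 square of foreground in a 5x5 mask
mask = [[0] * 5 for _ in range(5)]
for r, c in [(1, 2), (1, 3), (2, 2), (2, 3)]:
    mask[r][c] = 1
rng = random.Random(0)
print(simulate_box(mask, rng))  # (1, 2, 2, 3) with the default max_margin=0
print(simulate_click(mask, rng) in foreground(mask))  # True
```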

Performance of ScribblePrompt

During training, the model uses synthetic segmentation tasks together with simulated scribbles that mimic how users interact with images. After training on this diverse collection of datasets, ScribblePrompt outperformed four alternative methods: it segmented more efficiently and predicted the regions users intended to highlight more precisely. Because segmentation is a key step in both routine clinical practice and research, this is especially useful. ScribblePrompt is designed to make this step much faster and more efficient for researchers.
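Scribble simulation can be illustrated with a toy stand-in: a random walk that starts at a random foreground pixel and moves only between 8-connected foreground neighbours. This is a hypothetical sketch, not the generator used to train ScribblePrompt, which aims for more naturalistic strokes; `simulate_scribble` and its `steps` parameter are illustrative.

```python
import random
from typing import List, Sequence, Tuple

Mask = Sequence[Sequence[int]]
Point = Tuple[int, int]

# 8-connected neighbourhood offsets (row, col)
MOVES = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def simulate_scribble(mask: Mask, rng: random.Random, steps: int = 20) -> List[Point]:
    """Toy scribble: a random walk of up to `steps` moves that stays inside the mask."""
    h, w = len(mask), len(mask[0])
    pixels = [(r, c) for r in range(h) for c in range(w) if mask[r][c]]
    if not pixels:
        return []
    current = rng.choice(pixels)
    path = [current]
    for _ in range(steps):
        r, c = current
        neighbours = [
            (r + dr, c + dc)
            for dr, dc in MOVES
            if 0 <= r + dr < h and 0 <= c + dc < w and mask[r + dr][c + dc]
        ]
        if not neighbours:  # isolated pixel: the stroke cannot continue
            break
        current = rng.choice(neighbours)
        path.append(current)
    return path


# Usage: scribble inside a 3x6 band of foreground in a 5x8 mask
band = [[1 if 1 <= r <= 3 and 1 <= c <= 6 else 0 for c in range(8)] for r in range(5)]
stroke = simulate_scribble(band, random.Random(42), steps=10)
print(all(band[r][c] for r, c in stroke))  # True
```

Negative scribbles could be simulated the same way by walking over the background (the inverted mask).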

Segmentation algorithms in image analysis and machine learning rely heavily on human-annotated images. This is particularly challenging in medical imaging, where 3D volumes are common and annotating them does not come naturally to people. ScribblePrompt trains a network on simulated human interactions so that manual annotation becomes faster and more accurate. Its user-friendly interface lets annotators interact with imaging data more naturally, which raises both throughput and efficiency and is especially valuable for 3D volumes.
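Interactive annotation is usually iterative: the annotator inspects a prediction and adds clicks or scribbles where it is wrong. A common way to simulate this during training is to place the next click in the region where the prediction errs most. The sketch below simplifies that to counting false-negative versus false-positive pixels and sampling a click from the larger set; the function name and this rule are assumptions for illustration, not ScribblePrompt's documented procedure.

```python
import random
from typing import Optional, Sequence, Tuple

Mask = Sequence[Sequence[int]]


def simulate_correction(
    pred: Mask, target: Mask, rng: random.Random
) -> Optional[Tuple[int, int, bool]]:
    """Return (row, col, is_positive) for a corrective click, or None if pred == target.

    A positive click marks missed foreground (false negative); a negative click
    marks wrongly included background (false positive).
    """
    false_neg, false_pos = [], []
    for r, (pred_row, target_row) in enumerate(zip(pred, target)):
        for c, (p, t) in enumerate(zip(pred_row, target_row)):
            if t and not p:
                false_neg.append((r, c))
            elif p and not t:
                false_pos.append((r, c))
    if not false_neg and not false_pos:
        return None
    # Correct whichever kind of error currently covers more pixels.
    if len(false_neg) >= len(false_pos):
        r, c = rng.choice(false_neg)
        return r, c, True
    r, c = rng.choice(false_pos)
    return r, c, False


# Usage: the prediction misses the top row of the target
rng = random.Random(0)
print(simulate_correction([[0, 0], [1, 0]], [[1, 1], [1, 0]], rng)[2])  # True
```

In a training loop, the new click would be added to the prompts and the model run again, repeating until the prediction is good enough or an interaction budget is spent.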

Conclusion

Researchers present ScribblePrompt, a practical interactive segmentation framework that lets users segment a wide range of medical images with bounding boxes, clicks, and scribbles. They also provide techniques for generating synthetic labels and simulating naturalistic user interactions; with these techniques, models can be trained that generalize to datasets and segmentation tasks never seen before. ScribblePrompt is more accurate than current baselines, and ScribblePrompt-UNet remains computationally efficient even on a CPU. In the user study, nearly all participants preferred ScribblePrompt, and with it they produced segmentations with 15% higher Dice while requiring less effort than with the next most accurate baseline. ScribblePrompt should therefore substantially reduce the workload of manual segmentation in biomedical imaging.

Article Source: Reference Paper | Reference Article | Link to access dataset of scribble annotations: https://scribbleprompt.csail.mit.edu

Important Note: arXiv releases preprints that have not yet undergone peer review. These papers should therefore not be considered conclusive evidence, nor should they be used to guide clinical practice or influence health-related behavior. The information they present is not yet established or confirmed.


Deotima is a consulting scientific content writing intern at CBIRT. She is currently pursuing a Master's in Bioinformatics at Maulana Abul Kalam Azad University of Technology. As an emerging scientific writer, she is eager to apply her expertise to making intricate scientific concepts comprehensible to readers from diverse backgrounds. Deotima has a particular passion for Structural Bioinformatics and Molecular Dynamics.